Author(s): Jeremiah Ratican and James Hutson*

The conventional methodology for sentiment analysis within large language models (LLMs) has predominantly drawn upon human emotional frameworks, incorporating physiological cues that are inherently absent in text-only communication. This research proposes a paradigm shift towards an emotionally-agnostic approach to sentiment analysis in LLMs, which concentrates on purely textual expressions of sentiment, circumventing the confounding effects of human physiological responses. The aim is to refine sentiment analysis algorithms to discern and generate emotionally congruent responses strictly from text-based cues. This study presents a comprehensive framework for an emotionally-agnostic sentiment analysis model that systematically excludes physiological indicators whilst maintaining the analytical depth required for accurate emotion detection. A novel suite of metrics tailored to this approach is developed, facilitating a nuanced interpretation of sentiment within text data, which is paramount for enhancing user interaction across a spectrum of text mining applications, including recommendation systems and interactive AI characters. The research undertakes a critical comparative analysis, juxtaposing the newly proposed model with traditional sentiment analysis techniques, to evaluate efficacy enhancements and to substantiate its application potential. Further, the investigation delves into the short-term memory capabilities of LLMs, exploring the implications for character AI roleplaying interfaces and their ability to recall and respond to user input within a text-driven emotional framework. Findings indicate that this emotionally-agnostic sentiment analysis approach not only simplifies the sentiment assessment process within LLMs but also opens avenues for a more precise and contextually appropriate emotional response generation.
This abstracted model, devoid of human physiological constraints, represents a significant advancement in text mining, fostering improved interactions and contributing to the evolution of LLMs as adept analytical tools in various domains where emotional intelligence is crucial.

The nascent field of sentiment analysis, which endeavors to computationally discern and categorize affective states conveyed in text, is approaching a transformative epoch facilitated by the proliferation of large language models (LLMs). These computational behemoths, exemplified by OpenAI’s ChatGPT 3.5 and 4 iterations, have reached milestones in producing text with an unprecedented degree of linguistic sophistication and contextual relevance, simulating nuanced dialogues that bear striking resemblance to human exchanges [1]. These technological leaps have not only broadened the horizons of possible applications across diverse sectors such as healthcare, education, and assistive technologies but have also underscored the need for advancements in sentiment analysis to complement the emotional depth of human-AI interaction [2-5].

This surging tide of interest in emulating human cognitive complexity encompasses a significant branch that critically examines the integration of emotional intelligence within AI systems. Existing research underscores that the learning algorithms undergirding LLMs possess the potential to reflect a spectrum of personalities and emotional responses, fostering more profound user interactions and potentially elevating the user experience to new pinnacles of personalization [6, 7].

The present research trajectory anchors on an intellectually rigorous integration of large language models with emotionally agnostic text mining, a perspective resonating with modular mind theory. Earlier work laid the initial theoretical groundwork, suggesting the compartmentalization of cognitive processes into discrete, functional modules within the brain. Subsequent work expanded upon this, arguing for a parallel in artificial intelligence constructs and presenting a compelling case for modular representations within AI systems [8, 9].

Against this conceptual backdrop, the current study advances the dialogue by proposing an emotionally agnostic framework for sentiment analysis. This framework seeks to refine text mining in LLMs by eliminating physiological cues inherent to human emotion, focusing purely on textual information to derive sentiment. This emotionally neutral approach envisages a more nuanced understanding of textual data, unencumbered by the complexities and biases introduced by human emotional expression. The integration of such an emotionally agnostic sentiment analysis with the robust capabilities of LLMs represents a nascent frontier with profound implications for the future of AI and human-AI interaction.

Expanding on a formidable theoretical foundation and an expanding corpus of empirical research, the current article aspires to chart a new course in the emulation of human cognitive complexity by converging LLMs with autonomous agents. This synthesis, predicated on the conceptual pillars of the modular mind theory, aspires to enhance the dialogue within the intersecting spheres of artificial intelligence, psychology, and neuroscience. Grounded in seminal work that underscored the capability of autonomous agents to reflect human behaviors through the integration of personal motives and preferences, the current study proposes an avant-garde model that meticulously replicates the nuanced facets of mental processing [10].

The scope of this article extends beyond the conventional frameworks of sentiment analysis, advocating for an approach that meticulously separates emotional biases from the interpretation of textual data. The endeavor here is to engender a model that aligns more closely with the principles of emotion-agnostic text mining, thereby promoting an objective stance in sentiment analysis within the realm of LLMs. Such a model is anticipated to provide a richer, more accurate analysis of text by disentangling the intricacies of human sentiment from the underlying communicative intent. Furthermore, the study argues for the potential of LLMs to advance sentiment analysis by adopting a text-centric perspective that eschews human-like emotional biases. By constructing a synthetic analog of human cognition, partitioned into distinct modules for different aspects of mental processing, the study articulates a novel methodology that is hoped to be instrumental in advancing the field.

The aspirations of this work are manifold: to provide an innovative methodological approach that can be adopted by future research endeavors; to leverage the resultant insights for the development of more refined, emotionally intelligent AI systems; and to potentially uncover new dimensions of human cognition through the lens of artificial constructs. Thus, the proposed model seeks not only to elucidate the functionalities of LLMs in mimicking human cognitive processes but also to serve as a vanguard for subsequent technological innovations that straddle the domains of cognitive science and artificial intelligence. Through this synthesis of theoretical exploration and empirical validation, the article endeavors to pioneer an augmented pathway for understanding and replicating the vast complexities of human sentiment and cognition.

The scholarly investigation into the nexus of large language models (LLMs) and sentiment analysis within the literature unveils a nuanced conversation concerning the development of emotionally intelligent AI, as well as the psychosocial and ethical dimensions such developments entail. Researchers have posited that emotional acuity plays a significant role in intelligent behavior and decision-making, which traditionally has been integrated into AI systems to reflect more human-like interactions [11, 12]. For example, Mahmud et al. illuminate the significance of emotions as a signaling system that informs intelligent decision-making, thereby integrating emotional awareness into the computational fabric of AI. The grafting of these emotional components onto AI has marked a turning point in the AI evolutionary trajectory [13].

Frameworks advocating for AI systems to be endowed with social and emotional faculties have been presented as essential developments for fostering more intricate human-machine interactions [14, 15]. Observations by Samsonovich suggest that the embodiment of empathy within artificial entities can lead to more genuine social exchanges, supporting the notion that emotional intelligence within AI could enhance interpersonal dynamics. This is particularly relevant in the context of developing communication systems that leverage affective computing to interpret and reproduce emotional states, leading to a range of applications from empathetic chatbots to sector-specific user interfaces [16, 17].

The interplay between ethical considerations and the practical deployment of AI with emotional dimensions is a topic of vigorous debate among scholars [18, 19]. The ethical quandary regarding the simulation of human emotions by machines, as posited by Pusztahelyi, underscores the urgent need for a moral framework within which emotionally intelligent AI operates. Furthermore, the pursuit of affective computing has gradually shifted towards developing more sophisticated cognitive models that aim to accurately embody emotional intelligence within AI systems, indicating a sustained commitment to refine these emergent technologies [20].

Pivoting from these established insights, the present review explores the emerging paradigm of emotion-agnostic text mining within LLMs, positing a novel approach wherein the analysis of textual data is decoupled from human-like emotional biases. This literature review aims to synthesize current research that informs the design and implementation of LLMs capable of such emotion-agnostic sentiment analysis, framing a dialogue that interrogates the potential and limitations of current technologies, while exploring the future trajectory of emotionally intelligent AI absent of anthropomorphic emotional frameworks.

The literature indicates that the healthcare sector stands as a particularly promising arena for the application of emotionally intelligent AI systems. Tools for emotion recognition are posited as beneficial adjuncts to medical professionals, assisting in nuanced aspects of patient care and interaction [21]. Such applications, however, are not without their ethical considerations, particularly regarding the degree of autonomy these AI systems are granted in decision-making processes, a concern highlighted by [22]. Moreover, Andersson introduces a critical discourse on the potential implications for fundamental human rights, such as the right to freedom of thought, in the deployment of AI systems endowed with emotional intelligence [23].

The compendium of existing scholarship offers a comprehensive portrait of the multifarious aspects of emotional intelligence within AI: from foundational technological capabilities and beneficial integrations into cognitive systems, to frameworks designed to foster socio-emotionally adept AI. This is augmented by research into the communicative functions of AI, the progression of synthetic emotional intelligence, and the ethical considerations associated with the simulation of emotions by machines. The inclusion of healthcare-related applications and the legal intricacies that these technologies may encounter presents a panoramic overview of the advancements and future directions in the field of emotionally intelligent AI.

In addition to healthcare, the domains of video gaming and complex decision-making processes represent burgeoning fields of interest within the scholarly community. Pioneering studies have delved into the implementation of reinforcement learning to train AI agents for adaptive gameplay [24, 25]. These foundational studies elucidate methods by which AI can learn and evolve within specific game environments. Moreover, research supports the proposition that artificial agents can serve as proxies for human cognitive processes, particularly in the context of decision-making in games [26, 27]. There is also evidence that generative personas can yield behavioral outputs that closely mirror those of human players, underscoring the capacity of AI to effectively simulate complex human decision-making [27].

Within this research landscape, the current article seeks to explore the prospects of LLMs functioning within a framework that is agnostic to emotional biases. The aim is to dissect and understand the mechanisms by which LLMs can engage in sentiment analysis without emulating emotional responses. This exploration is rooted in the premise that such a development could address some of the ethical concerns presented by emotionally intelligent systems while maintaining their functional benefits in various sectors, including healthcare and gaming. The literature review thus sets the stage for a critical analysis of the potential for LLMs to operate within an emotionally neutral paradigm.

The burgeoning field of video game testing and evaluation has seen significant contributions from AI agents, a trend underscored by work demonstrating the capability of synthetic agents to match human testers in the identification of software anomalies [28]. Notwithstanding these advances, researchers have identified a limitation in the form of a lack of behavioral diversity among agents, which can lead to predictability in their actions. This issue is echoed in subsequent research, with some studies exploring the expansive possibilities for AI in video games and others addressing the intricate process of automating player character behavior [28-33].

In parallel, there has been a discernible shift towards the application of generative AI models such as ChatGPT across scientific and medical disciplines. Work based on extensive interviews has shed light on the potential for generative AI to expedite scientific breakthroughs. Similarly, it has been proposed that these models could refine statistical process control, although the same work signals the risks of misuse and misinterpretation inherent in early-stage technologies. Further research elaborates on the application of generative AI in spinal cord injury research, evidencing its efficacy in generating virtual models and enhancing medical procedures [34-36].

Recent breakthroughs have elucidated the potential of generative AI to embody a range of personas within virtual settings. A notable study by Park has contributed to this dialogue by engineering a roleplaying game environment inhabited by AI agents, each characterized by distinct personal narratives and social interplays. Employing the ChatGPT API, these researchers developed an advanced simulation framework featuring agents with individualized memories and life stories. When subjected to evaluations of behavior authenticity, these AI-driven simulations demonstrated a higher degree of realism compared to human participants engaging in role-play. Yet, the authors prudently highlight potential ethical dilemmas, such as the emergence of parasocial interactions and excessive dependence on AI constructs [10].

Synthesizing these diverse strands of investigation, it becomes evident that generative AI agents are increasingly integrated into a spectrum of domains, from entertainment to scientific inquiry and healthcare. Their proficiency in emulating complex human behavior is recognized, but is accompanied by explicit calls for responsible utilization. Particular attention is drawn to the ethical dimensions of such interactions, with an emphasis on maintaining a balance between AI advancements and human welfare. Thus, the scholarly narrative acknowledges the transformative nature of generative AI, while simultaneously advocating for a deliberate approach to understanding and addressing the challenges associated with their integration into human-centric domains.

Regarding the limitations of current approaches to large language models for sentiment analysis, the literature hints at several gaps [37]. These include challenges in understanding contextual nuance, detecting sarcasm, and the potential reinforcement of biases present in training data [38]. Although large language models like ChatGPT have made significant strides in text generation and comprehension, their capacity for sentiment analysis remains an area ripe for further refinement. Current models may struggle with the subtleties of human emotion, often misinterpreting or failing to detect the complex layers of sentiment embedded in natural language [39]. This indicates a need for continuous enhancement in algorithmic sophistication and a broader ethical dialogue on the deployment of such technologies in sectors where emotional intelligence is paramount.

The burgeoning domain of sentiment analysis within data mining is primed for transformation through the innovative application of LLMs like GPT-4. Traditional sentiment analysis methods frequently integrate affective computing, which attempts to detect and interpret human emotions through text [40]. However, these methods often rely on physiological or experiential data which may not be available or appropriate in textual analysis [41]. Therefore, a novel approach has been proposed that focuses exclusively on the textual elements of user input, eschewing the need for human-based emotional models [35].

The proposed strategy posits that by analyzing text through a purely linguistic lens—stripping away the influence of physiological cues—one could discern a more nuanced spectrum of sentiment. LLMs, equipped with the capability to process and generate text, are uniquely positioned to leverage such a strategy. These models can be fine-tuned to identify and categorize sentiments into emotional archetypes or categories, thereby enabling a deeper understanding of textual sentiment without reliance on physical emotional indicators. The proposed methodology would entail creating a framework within LLMs that can classify sentiment across a range of finely grained emotional categories. The system would quantify these sentiments, providing a nuanced emotional profile of textual input. Such a framework could draw upon a database of text-based interactions, eschewing the need for emotional labels derived from human annotators, which often incorporate subconscious biases related to physiological responses.
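As a rough illustration of the classification-and-quantification step described above, the following sketch scores a text against a handful of emotional categories using only lexical cues. The category names, the tiny keyword lexicon, and the function `sentiment_profile` are illustrative assumptions rather than the framework's actual design; in practice a fine-tuned LLM would stand in for the keyword matcher.

```python
import re
from collections import Counter

# Hypothetical mini-lexicon mapping each category to cue words (illustrative only).
LEXICON = {
    "joy": {"delighted", "happy", "love", "great"},
    "anger": {"furious", "angry", "hate", "outrageous"},
    "sadness": {"sad", "disappointed", "miserable", "sorry"},
    "fear": {"afraid", "worried", "anxious", "scared"},
}


def sentiment_profile(text):
    """Return each category's share of cue-word hits.

    The shares sum to 1.0 when at least one cue word is found;
    otherwise every category is scored 0.0.
    """
    tokens = re.findall(r"[a-z']+", text.lower())
    hits = Counter()
    for token in tokens:
        for category, cues in LEXICON.items():
            if token in cues:
                hits[category] += 1
    total = sum(hits.values())
    return {c: (hits[c] / total if total else 0.0) for c in LEXICON}


if __name__ == "__main__":
    example = "I love this, but I am worried and anxious about delivery."
    for category, share in sentiment_profile(example).items():
        print(f"{category}: {share:.2f}")
```

The output is a profile over several categories rather than a single positive/negative label, which is the property the proposed framework emphasizes; no physiological signal or human-assigned emotion label enters the computation.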

Furthermore, the model would be trained to discard any physiological elements that may confound the sentiment analysis, relying solely on text-driven cues. This is a departure from models that mimic human emotional responses, which often incorporate physical responses that are irrelevant in a text-only context. As these advanced models interact with users, they would use metrics to refine sentiment analysis, focusing exclusively on language use patterns, semantic structures, and syntactic cues. The practical implications of this methodology are extensive. For instance, in customer service chatbots, this approach would enable a more accurate understanding of customer feedback by analyzing the text for sentiment without misconstruing the absence of physiological indicators as a lack of emotional content. The system would, theoretically, provide a more precise sentiment analysis by recognizing the subtleties in language that indicate sentiment, even when traditional emotional cues are not present.

The advancement of sentiment analysis through emotionally-agnostic text mining in LLMs promises a more refined understanding of textual sentiment. It provides an innovative approach that could significantly enhance the accuracy of sentiment analysis across various applications, from customer service to literary analysis. The recalibration of sentiment analysis methodologies to focus purely on textual data could pave the way for more objective and reliable analyses, free from the constraints and potential biases introduced by human emotional interpretations.

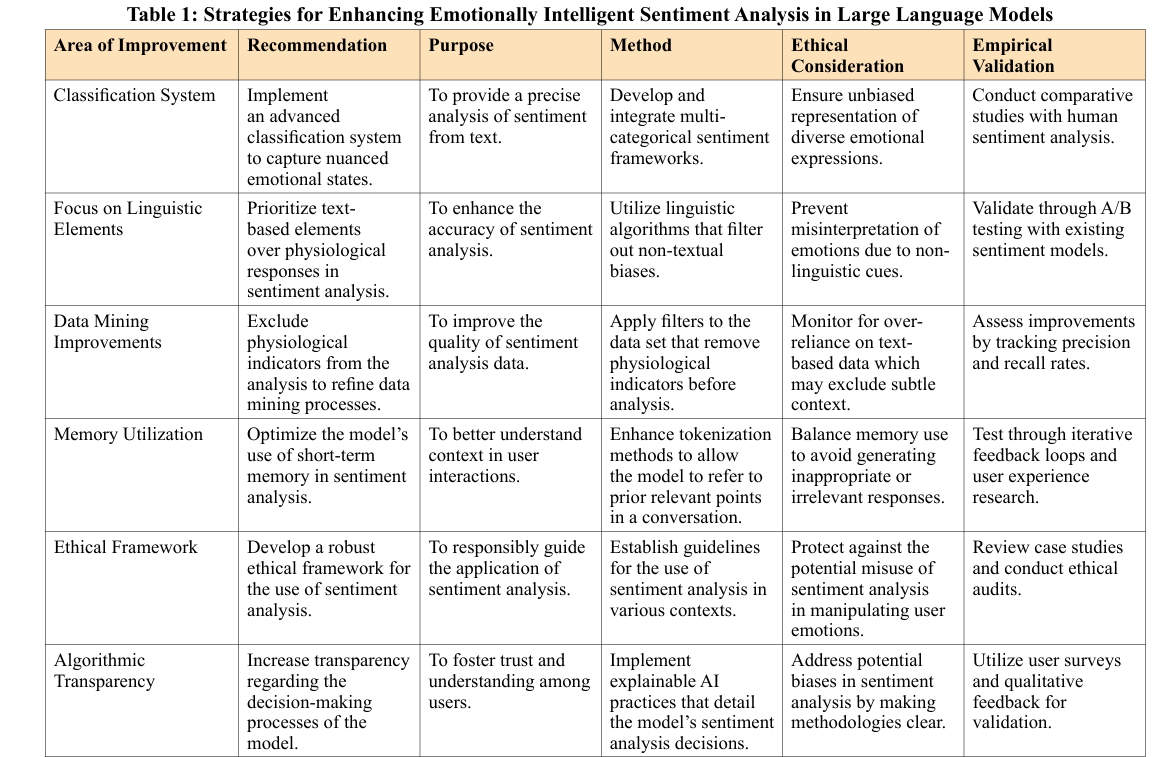

In the quest to emulate the complex modular architecture of human cognition, several key recommendations emerge for enhancing the capabilities of LLMs (Table 1). Foremost, an advanced classification system is warranted to capture the subtleties of emotional states through text-based interaction. Such a system must transcend the current dichotomous sentiment frameworks to discern finely grained emotional nuances, thereby providing a more precise analysis of sentiment from textual communication.

Additionally, the current sentiment analysis models must pivot towards non-physiological metrics that eschew reliance on the biological markers of emotion, focusing exclusively on the linguistic elements present in text. This shift requires a reevaluation and recalibration of algorithms to better interpret the subtleties and complexities of language as indicators of sentiment, divorced from the non-verbal cues that are imperceptible in digital communication.

An executive module, an element of the AI architecture analogous to human cognitive oversight functions, must be engineered to manage the coordination and integration of various cognitive modules within LLMs. This executive module would harmonize the disparate aspects of sentiment analysis, ensuring that the output is both coherent and contextually relevant. Addressing the limitations inherent in the token-based memory of LLMs like ChatGPT, efforts to enhance the model’s ability to reference prior interactions would significantly augment the continuity of conversation and the consistency of sentiment tracking. Such improvements would mimic more closely the human capacity for short-term recall, thus supporting a more authentic dialogue.
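The short-term memory limitation discussed above can be made concrete with a small sketch: a buffer that keeps only the most recent conversational turns fitting a fixed token budget, alongside a per-turn sentiment score. The class `ConversationMemory`, the whitespace-based token count, and the budget value are all assumptions made for illustration; real LLM tokenizers and context limits differ.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Turn:
    text: str
    sentiment: float  # assumed to lie in [-1, 1], supplied by any text-only scorer


class ConversationMemory:
    """Keeps the newest turns whose combined approximate token count fits a budget."""

    def __init__(self, max_tokens):
        self.max_tokens = max_tokens
        self.turns = deque()

    @staticmethod
    def _count_tokens(text):
        # Crude stand-in for a real tokenizer: whitespace-separated words.
        return len(text.split())

    def _total_tokens(self):
        return sum(self._count_tokens(t.text) for t in self.turns)

    def add(self, text, sentiment):
        self.turns.append(Turn(text, sentiment))
        # Evict the oldest turns until the buffer fits the budget again,
        # always retaining at least the newest turn.
        while self._total_tokens() > self.max_tokens and len(self.turns) > 1:
            self.turns.popleft()

    def mean_sentiment(self):
        """Average sentiment over the turns still held in memory."""
        if not self.turns:
            return 0.0
        return sum(t.sentiment for t in self.turns) / len(self.turns)


if __name__ == "__main__":
    memory = ConversationMemory(max_tokens=5)
    memory.add("a b c", 1.0)
    memory.add("d e", 0.0)
    memory.add("f g", -1.0)  # exceeds the budget, so the oldest turn is dropped
    print(len(memory.turns), memory.mean_sentiment())
```

Once the oldest turn is evicted, its sentiment no longer contributes to the running average, which mirrors the loss of continuity that the text attributes to token-based memory.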

For these systems to remain relevant and accurately attuned to the evolving lexicon of human emotion, an inherent capacity for continuous adaptation and learning is imperative. This dynamic quality would ensure that models like GPT-4 remain sensitive to novel expressions of sentiment and linguistic shifts over time. The importance of deploying such advanced sentiment analysis tools ethically cannot be overstated. A responsible application of these technologies mandates vigilant oversight to mitigate potential biases and guarantee interactions that uphold the principles of respect and empathy.

Finally, the empirical validation of these advancements is essential. Rigorous testing, including comparative studies with human subjects, should be conducted to establish the efficacy of text-based emotion categorization frameworks. Such research would not only substantiate the theoretical underpinnings of these models but also pave the way for their practical application across various domains. Therefore, the full potential of LLMs for sentiment analysis is yet to be realized. By adopting these recommendations, researchers and practitioners can unlock new dimensions of understanding in the emotional landscape of human-machine interaction, enhancing the granularity with which machines can interpret and respond to human sentiment through text.
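One way to approach the comparative validation described above is to measure chance-corrected agreement between a model's category labels and those assigned by human raters. The sketch below computes Cohen's kappa in plain Python; the label lists are invented placeholders, and the choice of kappa as the metric is an assumption, since the text does not specify one.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two equal-length label sequences."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label sequences must be non-empty and of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement under independence, from each rater's label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Placeholder labels, not real study data.
    model_labels = ["joy", "joy", "anger", "fear"]
    human_labels = ["joy", "anger", "anger", "fear"]
    print(round(cohens_kappa(model_labels, human_labels), 3))
```

A kappa near 1 would indicate that the text-only categorization tracks human judgments closely, while a value near 0 would indicate agreement no better than chance.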

The discourse on sentiment analysis within large language models (LLMs) has reached a pivotal juncture with the introduction of emotionally-agnostic text mining techniques. These techniques represent a paradigm shift from conventional sentiment analysis approaches that often incorporate a hybrid of textual and physiological indicators to interpret human emotions. The proposed model, with its innovative reliance solely on textual information, stands to redefine the parameters of sentiment analysis, aligning it more closely with the nature of text-based communication.

The principal takeaway from the current discussion is the identification of the potential that lies in decoupling sentiment analysis from the traditional reliance on human emotion models that include physiological elements. By extracting sentiment purely from text, the proposed model promises to deliver a more nuanced and intricate understanding of textual sentiment, thus avoiding the pitfalls of misinterpretation that often accompany the inclusion of physiological responses.

The benefits of such a text-centric approach to sentiment analysis are multifold. First, it enables a more objective analysis by eliminating the subjectivity inherent in interpreting physiological cues. Second, it facilitates the handling of large datasets where emotional labeling by humans is impractical. Third, it ensures consistency and scalability in sentiment analysis, essential for applications such as market analysis, public opinion mining, and automated customer service.

Future research should focus on developing a robust framework for implementing emotionally-agnostic sentiment analysis within LLMs. This will likely involve the creation of comprehensive linguistic models that can accurately parse and interpret the emotional valence of text without external emotional labeling. Empirical validation of the model’s efficacy compared to traditional sentiment analysis methods will be imperative.

In terms of implementation, the transition to emotionally-agnostic sentiment analysis will necessitate the refinement of LLMs to accommodate the new framework. Industry and academic partnerships could be pivotal in testing and refining these models in real-world scenarios. Finally, ethical considerations, particularly around the transparency and fairness of algorithmically derived sentiment analysis, must guide the deployment of these technologies.

In all, the proposed model of sentiment analysis through emotionally-agnostic text mining augurs a significant advancement in the field of artificial intelligence and computational linguistics. Its successful implementation could revolutionize the way sentiment is gauged and interpreted across vast swathes of text, offering a more reliable, consistent, and scalable solution [42-99].

Data Availability

Data available upon request.

Conflicts of Interest

The author(s) declare(s) that there is no conflict of interest regarding the publication of this paper.

Funding Statement

NA

Author Contributions

Conceptualization, J Ratican; Methodology, J Ratican; Validation, J Hutson; Investigation, J Hutson; Writing – Original Draft Preparation, J Hutson; Writing – Review & Editing, J Hutson; Visualization, J Hutson.