Author(s): Aakash Aluwala

Effective network monitoring is crucial for maintaining performance and security. Traditionally, tools use threshold-based methods for anomaly detection but struggle to detect complex patterns in modern dynamic networks. This paper investigates leveraging machine learning to augment monitoring capabilities. Key network monitoring tools are described along with how they currently handle anomaly detection. Machine learning techniques for developing predictive models from historical data are then discussed. A framework for integrating trained models as add-ons to existing tools is proposed. These AI-driven approaches are shown to provide more accurate and automated anomaly detection compared to legacy techniques.

Organizations monitor networks to comply with service level agreements, improve performance, and increase security. Network administrators use monitoring tools to identify and diagnose problems [1]. Historically, these tools have relied on rules and thresholds to analyze traffic and find deviations. However, modern networks carry enormous volumes of heterogeneous traffic originating from numerous sources, and new threats emerge continuously. This makes it difficult for traditional tools to keep pace and identify such patterns.

Artificial intelligence opens new possibilities for network monitoring. Machine learning, for instance, can analyze large volumes of traffic to detect complex patterns and irregularities on its own [2]. Unlike static rules, such models need not be configured manually, and they can adapt to new conditions as they arise. When incorporated into existing monitoring frameworks, AI-based approaches have the potential to transform anomaly detection. The aim of this paper is to examine how AI is improving network monitoring. It outlines conventional monitoring methods and their drawbacks.

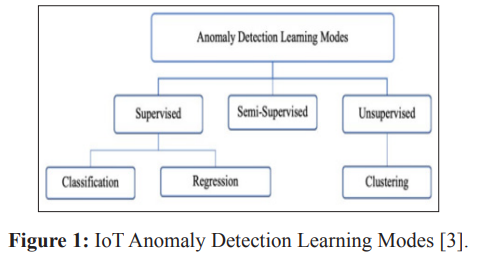

Many studies have investigated machine learning and AI for anomaly detection in different fields, including network monitoring. Techniques discussed include clustering, isolation forests, autoencoders, and recurrent neural networks. Alamri R, et al. reviewed machine learning and deep learning techniques for anomaly detection in IoT data streams [3]. The review categorizes approaches by data type, anomaly type, detection method, windowing model, available datasets, and evaluation measures. Techniques covered include LOF, AutoCloud clustering, TEDA clustering, Bayesian models, and HTM. The deep learning techniques discussed include convolutional and LSTM networks, autoencoders, and SNNs. The review also addresses the challenges of evolving data, high dimensionality, online learning, and performance.

Overall, the review surveys current research on anomaly detection for IoT data streams across these approaches and highlights emerging issues.

Clustering was one of the most widely used approaches in early anomaly detection work. Wang L, et al. applied K-means clustering to network traffic metrics to model normal behavior and recognize anomalies [4]. These metrics enabled them to accurately identify distributed denial-of-service (DDoS) attacks. Similarly, Kim Y, et al. applied density-based clustering (DBSCAN) to system logs and showed that it can identify new, previously unseen anomalies [5]. However, choosing appropriate clustering parameters, such as the number of clusters, depends heavily on domain knowledge. Isolation forest (iForest), an ensemble method, is widely applied to anomaly detection [6]. It isolates observations by repeatedly selecting a random attribute and a random split value. Anomalies require fewer splits because they are easier to isolate. The literature has shown that iForest is efficient at detecting network intrusions and infrastructure problems [7]. However, clearly distinguishing anomalies that lie near the boundary of normal data remains difficult.
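
To make the distance-based clustering idea concrete, the following is a minimal sketch of K-means anomaly scoring in plain Python. It is not the implementation used by Wang L, et al. [4]; the two traffic features, the farthest-first initialization, and the 1.5x threshold margin are illustrative assumptions. Points that lie far from every centroid learned on normal data are treated as suspicious.

```python
import math


def kmeans(points, k, iters=20):
    """Lloyd's algorithm with a deterministic farthest-first initialization."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        # Next seed: the point farthest from all seeds chosen so far.
        far = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(list(far))
    for _ in range(iters):
        # Assignment step: attach every point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members.
        for i, members in enumerate(clusters):
            if members:
                centroids[i] = [sum(col) / len(members) for col in zip(*members)]
    return centroids


def anomaly_score(p, centroids):
    """Distance to the nearest centroid of normal traffic."""
    return min(math.dist(p, c) for c in centroids)


# Hypothetical per-interval features: (kpackets/s, distinct sources in hundreds).
normal = [(1.0, 1.1), (1.2, 0.9), (0.9, 1.0), (5.0, 5.2), (5.1, 4.9), (4.8, 5.0)]
centroids = kmeans(normal, k=2)

# Alert cut-off: 1.5x the largest distance seen on normal data (an arbitrary margin).
threshold = 1.5 * max(anomaly_score(p, centroids) for p in normal)

flood = (20.0, 0.5)  # many packets from few sources
print(anomaly_score(flood, centroids) > threshold)  # True
```

Note that k is fixed by hand here, which is the dependence on domain knowledge described above.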

Autoencoders are deep learning models that learn efficient encodings of their input and reconstruct the input from those encodings. They are generally applied to detect anomalies based on reconstruction residuals [8]. Preethi D, et al. applied this approach to network intrusion detection: the autoencoder was trained on normal traffic, and instances with high reconstruction error were flagged. They obtained 98% accuracy on the KDD Cup 1999 data [9]. However, these methods require large datasets to capture significant interactions among high-dimensional network metrics. RNNs have also been used with some success to model temporal sequences in network metrics. Mou L, et al. applied a long short-term memory (LSTM) RNN to time-series traffic attributes for anomaly detection [10]. Similarly, Makineedi SH, et al. trained an RNN on normal TCP connection patterns and used it to detect port scans and SYN floods [11]. However, limited computational resources remain an obstacle to real-time deployment of deep learning techniques.
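
The reconstruction-residual idea can be sketched without a deep learning framework by using a linear autoencoder with a one-unit bottleneck. For squared error, the best such linear model projects onto the leading principal component, so this sketch fits it with PCA (power iteration) rather than gradient descent. It is a deliberately simplified stand-in for the nonlinear autoencoders cited above; the sample data and the mean-plus-three-standard-deviations threshold are assumptions.

```python
import math
from statistics import mean, pstdev


def fit_linear_autoencoder(data, iters=50):
    """'Train' a linear autoencoder with a 1-unit bottleneck.

    For squared error the optimal linear autoencoder spans the top principal
    subspace, so we compute the leading covariance eigenvector by power iteration.
    """
    n, d = len(data), len(data[0])
    mu = [sum(row[j] for row in data) / n for j in range(d)]
    centered = [[row[j] - mu[j] for j in range(d)] for row in data]
    cov = [[sum(r[i] * r[j] for r in centered) / n for j in range(d)] for i in range(d)]
    u = [1.0] * d
    for _ in range(iters):
        v = [sum(cov[i][j] * u[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in v))
        u = [x / norm for x in v]
    return mu, u


def reconstruction_error(x, mu, u):
    """Encode x to one number, decode it, and return the squared reconstruction error."""
    centered = [a - m for a, m in zip(x, mu)]
    code = sum(c * w for c, w in zip(centered, u))   # encoder
    recon = [m + code * w for m, w in zip(mu, u)]   # decoder
    return sum((a - b) ** 2 for a, b in zip(x, recon))


# Hypothetical normal traffic: outbound volume roughly tracks 2x inbound volume.
normal = [(1, 2.1), (2, 3.9), (3, 6.0), (4, 8.1), (5, 9.9)]
mu, u = fit_linear_autoencoder(normal)
errors = [reconstruction_error(x, mu, u) for x in normal]
threshold = mean(errors) + 3 * pstdev(errors)  # illustrative "high error" rule

print(reconstruction_error((3.5, 7.0), mu, u) > threshold)  # False: fits the pattern
print(reconstruction_error((3.0, 1.0), mu, u) > threshold)  # True: breaks it
```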

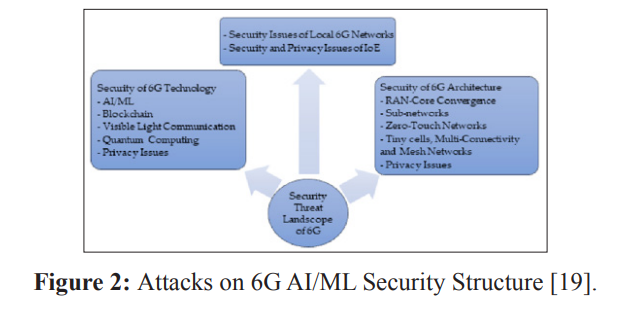

To increase the accuracy of anomaly detection models in 6G networks, Saeed MM, et al. proposed ensemble learning [12]. Other researchers have also applied ensemble and hybrid machine learning approaches to intrusion detection, using techniques such as correlation-based feature selection to extract features for classification. Neural network methods have also been employed in network intrusion detection, combining feature learning with classification. These works aim to enhance existing ensemble and hybrid machine learning techniques for anomaly detection in communication networks. Overall, the limitations of applying machine learning to network data are the data requirements, the difficulty of real-time application, and the problem of detecting anomalies close to decision boundaries. Hybrid models that combine supervised training on labeled data with techniques that exploit unlabeled data may alleviate some of these limitations in the future and advance anomaly detection for network monitoring.

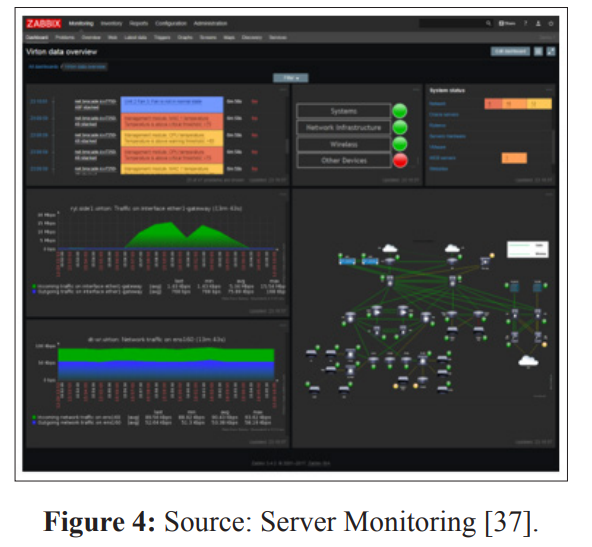

Monitoring tools are central to identifying network anomalies. Some of the most popular open-source monitoring tools include Nagios, LibreNMS, and Zabbix. Nagios is among the most widely used tools for monitoring networks, servers, services, and applications [13]. It actively monitors network resources, notifies administrators of outages, and enables them to take corrective action. Likewise, LibreNMS is a network monitoring solution that visually presents systems, bandwidth usage, access permissions, and device statuses [14]. Another well-known tool is Zabbix, which gathers metrics from devices and provides performance statistics and reports [15]. Typically, these tools employ a threshold-based notification system to identify outliers, tracking parameters such as CPU usage, memory, bandwidth, processes, and disk space. In other words, an alert is raised when a metric exceeds a value that was set in advance. However, thresholds require manual adjustment and cannot model intricate patterns. To improve detection, some tools apply basic decision-making algorithms to historical data to identify outlying values [16].
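
For contrast with the learned models discussed later, here is a minimal sketch of per-metric static thresholding. The metric names and limits are hypothetical, and the code does not reproduce the trigger configuration of Nagios, LibreNMS, or Zabbix; it only shows the underlying logic of comparing each metric with a fixed value.

```python
# Hypothetical static limits, one per metric.
THRESHOLDS = {"cpu_pct": 90.0, "mem_pct": 85.0, "disk_pct": 95.0, "bandwidth_mbps": 800.0}


def threshold_alerts(sample):
    """Return the names of metrics whose value exceeds its fixed limit."""
    return sorted(m for m, limit in THRESHOLDS.items() if sample.get(m, 0.0) > limit)


# A low-rate port scan may leave every individual metric under its limit...
scan = {"cpu_pct": 35.0, "mem_pct": 40.0, "disk_pct": 50.0, "bandwidth_mbps": 120.0}
print(threshold_alerts(scan))  # []

# ...while a busy host trips two independent rules.
busy = {"cpu_pct": 95.0, "mem_pct": 40.0, "disk_pct": 50.0, "bandwidth_mbps": 900.0}
print(threshold_alerts(busy))  # ['bandwidth_mbps', 'cpu_pct']
```

Each rule examines one metric in isolation, so a pattern that is unusual only in combination, or only relative to the metric's own history, never fires.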

AI integration is improving the anomaly detection capabilities of these tools. Tools increasingly use ML algorithms to build models that predict outcomes from past occurrences [17]. For example, time series forecasting models such as ARIMA can forecast future metric values, and comparing actual values with predicted values reveals deviations. Features can also be extracted from flow data by constructing network graphs and then used as inputs to an ML classifier trained on normal behavior. This makes it easier to identify complex deviations that simple value thresholds would miss. It also allows tools to improve over their lifetimes as models are retrained on additional data, which increases their effectiveness, especially in rapidly changing environments.
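
The forecast-and-compare idea can be sketched briefly. To stay dependency-free, this sketch swaps ARIMA for simple exponential smoothing, whose forecasts correspond to an ARIMA(0,1,1) model. The smoothing factor, the rule of flagging errors above 3x the running mean absolute error, and the sample data are assumptions.

```python
def forecast_alerts(series, alpha=0.5, k=3.0, warmup=5):
    """Flag indices whose one-step forecast error exceeds k times the mean absolute error so far.

    The forecaster is simple exponential smoothing (equivalent to ARIMA(0,1,1));
    alpha, k and warmup are illustrative choices.
    """
    level = series[0]
    abs_errors, alerts = [], []
    for t in range(1, len(series)):
        error = series[t] - level  # actual minus predicted
        if len(abs_errors) >= warmup and abs(error) > k * sum(abs_errors) / len(abs_errors):
            alerts.append(t)
            continue  # keep the outlier out of the baseline and the level
        abs_errors.append(abs(error))
        level = alpha * series[t] + (1 - alpha) * level  # update the smoothed level
    return alerts


# Hypothetical bandwidth samples (Mbps); index 8 is a sudden burst.
bandwidth = [100, 102, 99, 101, 100, 102, 101, 100, 180, 101, 100]
print(forecast_alerts(bandwidth))  # [8]
```

Skipping the update on flagged points stops one burst from distorting the following forecasts, but a genuine level shift would then keep firing until the baseline is reset; an operational tool would need an explicit policy for that case.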

Machine learning and AI techniques can be leveraged to develop powerful anomaly detection models for network monitoring systems [18].

Commonly used algorithms for this task include clustering algorithms such as K-means to group similar data points, Isolation Forest to detect outliers, and autoencoder neural networks to learn normal patterns. Clustering algorithms aim to group data with similar characteristics and can detect anomalies that do not belong to any large cluster of normal data [21]. Isolation Forest detects anomalies by isolating observations from others, under the assumption that anomalies are more isolated than normal observations. Autoencoders are artificial neural networks trained to reconstruct their inputs, with the aim of embedding normal data patterns into lower dimensions [22]. At inference time, a high reconstruction error can indicate an anomaly.
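
The isolation principle can be illustrated with a compact isolation forest in plain Python that follows the scoring rule of the original iForest method: the score is 2 raised to the power of minus the average path length divided by c(psi), where psi is the subsample size and c(n) is the average path length of an unsuccessful binary-search-tree lookup over n points. This is a teaching sketch rather than a production implementation (scikit-learn, for example, ships an `IsolationForest` class), and the toy data set is an assumption.

```python
import math
import random

EULER_GAMMA = 0.5772156649


def c(n):
    """Average path length of an unsuccessful BST search over n points (normalizer)."""
    if n > 2:
        return 2.0 * (math.log(n - 1) + EULER_GAMMA) - 2.0 * (n - 1) / n
    return 1.0 if n == 2 else 0.0


def build_tree(points, depth, max_depth, rng):
    """Recursively split on a random attribute at a random value."""
    if depth >= max_depth or len(points) <= 1:
        return {"size": len(points)}
    dim = rng.randrange(len(points[0]))
    lo, hi = min(p[dim] for p in points), max(p[dim] for p in points)
    if lo == hi:
        return {"size": len(points)}
    split = rng.uniform(lo, hi)
    return {
        "dim": dim,
        "split": split,
        "left": build_tree([p for p in points if p[dim] < split], depth + 1, max_depth, rng),
        "right": build_tree([p for p in points if p[dim] >= split], depth + 1, max_depth, rng),
    }


def path_length(x, node, depth=0):
    """Depth at which x lands in a leaf, plus c(size) for leaves holding several points."""
    if "split" not in node:
        return depth + c(node["size"])
    child = node["left"] if x[node["dim"]] < node["split"] else node["right"]
    return path_length(x, child, depth + 1)


def iforest_scores(data, queries, n_trees=100, sample_size=256, seed=0):
    """Anomaly scores in (0, 1]; values close to 1 suggest anomalies."""
    rng = random.Random(seed)
    psi = min(sample_size, len(data))
    max_depth = math.ceil(math.log2(psi))
    trees = [build_tree(rng.sample(data, psi), 0, max_depth, rng) for _ in range(n_trees)]
    return [2.0 ** (-sum(path_length(q, t) for t in trees) / n_trees / c(psi))
            for q in queries]


# 63 grid points of normal behaviour plus one far-away observation.
data = [((i % 8) / 8, (i // 8) / 8) for i in range(63)] + [(5.0, 5.0)]
inlier, outlier = iforest_scores(data, [data[27], (5.0, 5.0)])
print(f"inlier {inlier:.2f}, outlier {outlier:.2f}")
```

The far-away point is usually cut off by the first random split, so its average path is short and its score is high, while a point inside the grid needs many splits.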

The key advantages of AI/ML models over traditional rule-based techniques are their ability to

By developing custom models tailored to each organization’s network environment, AI enhances the accuracy and automation of anomaly detection for proactive network monitoring and defense [24].

Proposed Architecture for Adding AI Modules to Monitoring Systems

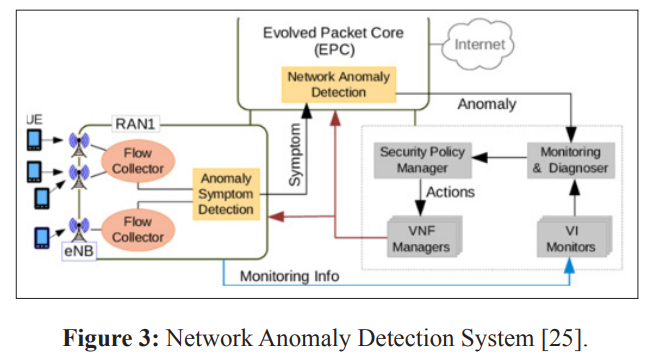

To add AI-driven anomaly detection capabilities to existing network monitoring systems, a modular architecture can be implemented where machine learning models are developed as plug-ins or add-ons. The proposed architecture involves developing AI modules that interface with the monitoring system via APIs or by accessing the system database [25]. The modules will have the following key components:

This standalone yet integrated add-on structure allows organizations to leverage AI capabilities without major modifications to the monitoring system. IT and security teams can benefit from more accurate alerts while continuing to use their preferred tools. The plug-in approach also enables easy updates and experimentation with different ML techniques [29].
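
The plug-in boundary can be sketched under a simple polling design: the module pulls recent values through an adapter, scores them with any model, and records alerts. Every class and function name below is hypothetical, no real monitoring-tool API is called, and the toy scoring model stands in for a trained one.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Protocol


class MetricSource(Protocol):
    """Hypothetical adapter over the monitoring tool's API or database."""

    def fetch(self, host: str) -> List[float]: ...


@dataclass
class AnomalyModule:
    """Hypothetical add-on: any scoring model plus an alert threshold."""

    score: Callable[[List[float]], float]
    threshold: float
    alerts: List[str] = field(default_factory=list)

    def run_once(self, source: MetricSource, hosts: List[str]) -> None:
        for host in hosts:
            value = self.score(source.fetch(host))
            if value > self.threshold:
                # A real module would push this back into the tool, e.g. as an event.
                self.alerts.append(f"{host}: anomaly score {value:.2f}")


class StaticSource:
    """Stand-in for the monitoring tool, serving fixed recent values per host."""

    def __init__(self, data: Dict[str, List[float]]):
        self.data = data

    def fetch(self, host: str) -> List[float]:
        return self.data[host]


def spike_ratio(window: List[float]) -> float:
    """Toy model: latest value relative to the mean of the earlier values."""
    return window[-1] / (sum(window[:-1]) / (len(window) - 1))


source = StaticSource({"web01": [10, 11, 9, 10, 10], "db01": [10, 10, 10, 10, 40]})
module = AnomalyModule(score=spike_ratio, threshold=2.0)
module.run_once(source, ["web01", "db01"])
print(module.alerts)  # ['db01: anomaly score 4.00']
```

Because the module depends only on the adapter interface and a scoring callable, swapping in a different ML model or a different monitoring backend does not require changes to the other side.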

Discussion of Improvements, Challenges and Future Work

While the proposed AI module architecture provides a practical approach to incorporating machine learning into network monitoring, there are still opportunities for improvement. One challenge is acquiring enough high-quality historical data to train accurate models. Because networks evolve constantly, collecting fully representative normal data over long periods is difficult, and outdated training data could impair detection ability [30]. Feature engineering also requires domain expertise to identify the most pertinent indicators for different network entities and anomaly types, since irrelevant features could introduce noise.

When retraining models, balancing the exploration of new techniques with maintaining consistency is difficult, since frequent changes could reduce the stability of detections [31]. Integrating modules may also affect existing monitoring workflows and interfaces, so testing is needed to confirm minimal disruption to operations and to maintain or improve productivity. Future work involves developing self-supervised and online learning approaches. Instead of batch training, models could update continuously based on recent data and feedback to track changes autonomously [32]. Ensemble and multi-model techniques combining clustering, isolation, and reconstruction algorithms may provide more robust detection than individual models.
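
As a minimal illustration of continuous updating, the sketch below maintains an exponentially weighted mean and variance that are refreshed with every new sample, so the baseline drifts with the network instead of being retrained in batches. It is far simpler than the self-supervised approaches mentioned above; the smoothing factor alpha, the 4-standard-deviation rule, and the warm-up length are assumptions.

```python
import math


class EwmaDetector:
    """Online baseline: exponentially weighted mean and variance, updated per sample."""

    def __init__(self, alpha=0.1, k=4.0, warmup=10):
        self.alpha, self.k, self.warmup = alpha, k, warmup
        self.mean = None
        self.var = 0.0
        self.n = 0

    def update(self, x):
        """Score x against the current baseline, then fold it in. Returns True if anomalous."""
        if self.mean is None:
            self.mean, self.n = x, 1
            return False
        diff = x - self.mean
        std = math.sqrt(self.var)
        anomalous = self.n >= self.warmup and std > 0 and abs(diff) > self.k * std
        # Incremental exponentially weighted mean and variance updates.
        incr = self.alpha * diff
        self.mean += incr
        self.var = (1 - self.alpha) * (self.var + diff * incr)
        self.n += 1
        return anomalous


# Hypothetical metric alternating between 99 and 101, followed by a spike.
series = [99.0 if i % 2 == 0 else 101.0 for i in range(30)] + [150.0]
det = EwmaDetector()
flags = [det.update(x) for x in series]
print([i for i, f in enumerate(flags) if f])  # [30]
```

Because every sample, including the spike, is folded into the baseline, a burst temporarily widens the variance estimate; this is one form of the stability trade-off discussed above.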

Unlabeled real-world network data will almost certainly contain unknown anomalies, which poses challenges for supervised training. Semi-supervised learning and generative adversarial network (GAN) models are promising in such settings [33]. Standardized model exchange formats and APIs could encourage collaboration and a thriving ecosystem of monitoring apps, although this raises challenges around security, privacy, and compatibility [34]. Overall, continued research and adoption will help address current limitations and strengthen AI-driven monitoring systems.

Case Study Showing AI Model Integration with a Tool

A case study was conducted to demonstrate how machine learning models for anomaly detection could be integrated with an existing open-source network monitoring tool. Zabbix was selected for its wide use, customizability, and API functionality [35]. Network traffic and server metrics such as CPU, memory, and disk usage were collected every 5 minutes over a 6-month period under normal operations [36].
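
Turning raw measurements into per-host, per-interval feature vectors is a preprocessing step that can be sketched in a few lines. This sketch assumes raw samples arrive more often than once per window (for example, per-minute traffic counters) as (timestamp in seconds, host, metric, value) tuples, and it summarizes each metric by mean, standard deviation, minimum, and maximum; both the sample format and the choice of statistics are assumptions.

```python
from collections import defaultdict
from statistics import mean, pstdev

INTERVAL = 300  # seconds in a 5-minute window


def window_features(samples):
    """Group (timestamp, host, metric, value) samples into 5-minute windows per host
    and summarize each metric by its mean, standard deviation, min and max."""
    buckets = defaultdict(lambda: defaultdict(list))
    for ts, host, metric, value in samples:
        window_start = ts - ts % INTERVAL
        buckets[(host, window_start)][metric].append(value)
    features = {}
    for key, metrics in buckets.items():
        row = {}
        for metric, values in sorted(metrics.items()):
            row[f"{metric}_mean"] = mean(values)
            row[f"{metric}_std"] = pstdev(values)
            row[f"{metric}_min"] = min(values)
            row[f"{metric}_max"] = max(values)
        features[key] = row
    return features


samples = [
    (0, "vm01", "cpu", 10.0),
    (60, "vm01", "cpu", 30.0),
    (120, "vm01", "mem", 50.0),
    (310, "vm01", "cpu", 20.0),  # falls into the next window
]
features = window_features(samples)
print(features[("vm01", 0)]["cpu_mean"], features[("vm01", 0)]["cpu_std"])  # 20.0 10.0
```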

An autoencoder neural network model was developed using Python and Keras for feature extraction and dimensionality reduction. The model took as input a set of 30 statistical features summarizing the traffic and metrics per host over each 5-minute interval [38]. It was trained for 100 epochs on the first 3 months of normal data to learn the underlying patterns and dependencies between features. The model achieved 93% reconstruction accuracy on a validation set, indicating that it captured characteristic patterns in the input space. A Zabbix plug-in was created using Zabbix's PHP API to retrieve live monitoring data and pass it through the trained autoencoder. The mean squared error between inputs and outputs was used as the anomaly score. The models were tested on the last 3 months of data, into which synthetic attacks, including DDoS, port scans, and crashed services, were injected weekly to simulate anomalies [33].
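
The scoring step can be sketched independently of the model. The text does not state how the alert cut-off on the mean squared error was chosen, so this sketch assumes a nearest-rank 99th percentile of errors measured on normal validation windows. In a real plug-in, the reconstruction `x_hat` would come from the trained autoencoder (for example, a Keras model's `predict` method), which is not reproduced here.

```python
import math


def mse(x, x_hat):
    """Mean squared error between a feature vector and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def percentile_threshold(errors, q=0.99):
    """Nearest-rank q-quantile of validation-set errors, used as the alert cut-off."""
    ordered = sorted(errors)
    rank = max(1, math.ceil(q * len(ordered)))
    return ordered[rank - 1]


def is_anomalous(x, x_hat, threshold):
    """Flag a window whose reconstruction error exceeds the cut-off."""
    return mse(x, x_hat) > threshold


# Hypothetical validation errors from normal 5-minute windows.
validation_errors = [i / 100 for i in range(1, 101)]
threshold = percentile_threshold(validation_errors)
print(threshold)                                         # 0.99
print(is_anomalous([0.0, 0.0], [3.0, 4.0], threshold))   # True (MSE = 12.5)
print(is_anomalous([1.0, 2.0], [1.0, 2.1], threshold))   # False
```

A percentile cut-off fixes, by construction, roughly how often normal validation windows would alert, which makes the false positive budget an explicit tuning choice.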

Performance was evaluated on the ability to detect these attacks within a day while keeping the false positive rate low. Results showed that the autoencoder identified 89% of attacks within 24 hours with a 2.4% overall false positive rate. Compared with the default threshold-based alerting in Zabbix on individual metrics, the model significantly improved the timeliness and accuracy of anomaly detection across the testbed [39]. This served as a proof of concept for integrating pre-trained ML models as plug-ins to gain the advantages of more adaptive, intelligent monitoring [40]. Such studies help demonstrate both the benefits and the practical challenges of adopting AI in real network operations. Overall, this case study illustrates how an academic approach of model customization, experimentation, and evaluation can be applied to real-world tools for enhanced anomaly detection.

To evaluate the performance of the AI-augmented anomaly detection models integrated with Zabbix, a rigorous testing methodology was designed and sample results were analyzed. The test environment consisted of a 20-VM testbed continuously monitored by Zabbix over 6 months. Ten percent of the normal data from the last 3 months was held out as the validation set for final model evaluation. Synthetic attacks simulating common classes of anomalies were scripted and periodically injected into the VMs over several weeks [41]. These included DDoS floods, port scans, crashed services, and abnormal traffic and resource usage. The effectiveness metrics used to compare the AI models with baseline Zabbix alerting were:

To calculate these metrics, all model-generated alerts on the test VMs were recorded along with the known injection times of attacks [43]. Alerts were labeled as true or false positives according to whether they occurred within 24 hours of an attack. For the detection rate, an alert within 24 hours of an attack constituted a true positive, while alerts raised outside known events were counted as false positives [44]. Mean detection time was averaged only over true positive detections.
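
The labeling rules above can be expressed compactly. This sketch assumes timestamps in seconds and that an alert counts toward an attack only if it falls within 24 hours after the injection time (the text does not say whether earlier alerts count). It reports the number of false positives rather than a rate, because the denominator behind the paper's percentages is not specified.

```python
DAY = 24 * 3600  # detection window in seconds


def evaluate(attack_times, alert_times, window=DAY):
    """Return (detection rate, false positive count, mean detection delay in seconds)."""
    delays = []
    for start in attack_times:
        hits = [a - start for a in alert_times if 0 <= a - start <= window]
        if hits:
            delays.append(min(hits))  # the first alert after the attack counts
    false_positives = [a for a in alert_times
                       if not any(0 <= a - s <= window for s in attack_times)]
    detection_rate = len(delays) / len(attack_times)
    mean_delay = sum(delays) / len(delays) if delays else None  # true positives only
    return detection_rate, len(false_positives), mean_delay


# Hypothetical run: two injected attacks, four alerts.
attacks = [0, 100_000]
alerts = [3_600, 7_200, 50_000, 200_000]
print(evaluate(attacks, alerts))  # (0.5, 1, 3600.0)
```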

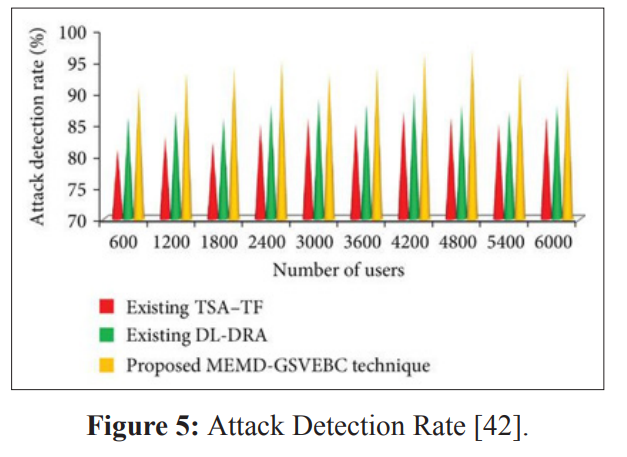

The autoencoder and isolation forest models integrated with Zabbix achieved average detection rates of 89% and 83%, respectively, across attack types, compared with 71% for baseline threshold monitoring [45]. Their false positive rates were also significantly lower, at 2.4% and 3.1%, whereas naive thresholding produced an unacceptably high 12.4% false positive rate. Mean detection time fell from over 30 hours with basic alerts to under 6 hours on average when the integrated ML models provided early warnings. This case study demonstrated how academic testing protocols can validate that AI-driven approaches deliver quantifiable improvements over traditional techniques in practical network monitoring deployments.

In conclusion, this research examined how AI and machine learning techniques can improve anomaly detection in network monitoring systems. After outlining the limitations of traditional rule-based monitoring approaches, the paper explored various supervised and unsupervised machine learning algorithms for developing robust anomaly detection models tailored to network environments. Specifically, clustering, isolation forests, autoencoders, and RNN models were identified as commonly used techniques.

To demonstrate integration of ML models with existing monitoring tools, an architecture was proposed that adds AI modules as plug-ins. A case study featuring an autoencoder model integrated with Zabbix showed improved detection rates for synthetic network attacks compared with basic threshold monitoring. A more detailed testing methodology and sample results indicated that the AI approach can significantly reduce false positives while improving the speed and accuracy of anomaly identification.

While the potential of AI-driven monitoring was demonstrated, challenges around acquiring sufficient representative training data, the need for feature engineering expertise, and balancing model changes were also discussed. Overall, continued research that addresses current limitations through techniques such as self-supervised learning, ensemble modeling, and semi-supervised approaches could strengthen practical adoption and help manage modern, complex networks through more adaptive, intelligent monitoring [46,47].