Author(s): Sasi Kanumuri

The growing use of cloud storage has revolutionized data management by offering unparalleled scalability and accessibility. However, optimizing cloud storage costs remains challenging, and unmanaged usage can lead to significant unforeseen expenses. This paper explores a wide range of advanced techniques and practical case studies that enable enterprises to navigate cost optimization across top cloud providers, including Google Cloud Platform (GCP), Microsoft Azure, and Amazon Web Services (AWS). Beyond selecting the most appropriate storage type for individual workloads (block, blob, or file storage), we explore advanced strategies that unlock substantial cost savings. From leveraging contractual negotiations for high-volume storage users to utilizing dedicated data transfer devices for streamlined on-premises migration, this paper equips readers with a diverse set of optimization tools. Beyond purely technical solutions, we cover best practices for data lifecycle management, such as tiered backups and granular retention policies, so that data is held at the most affordable tier given its access frequency and legal requirements. By consistently analyzing cloud storage consumption and adapting to changing requirements, enterprises can retain optimal performance while ensuring long-term financial sustainability. This paper serves as a resource for organizations seeking to master cloud storage cost optimization. By implementing the techniques and best practices outlined herein, cloud users can unlock significant cost savings and maximize the return on their cloud storage investments.

The evolution of the digital landscape has propelled cloud storage to the forefront of modern data management. Its inherent virtues of unparalleled scalability, ubiquitous accessibility, and remarkable flexibility have empowered organizations to surpass on-premises infrastructure and embrace a dynamic, data-driven future. However, cost optimization is a critical challenge in cloud storage usage. Uncontrolled cloud storage expenses can swiftly escalate, eroding profitability and negatively affecting the economic advantages of the cloud model.

Many businesses need help navigating cloud storage cost optimization. This paper examines the issue, focusing on cost reduction across the three major cloud providers: Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). It surveys the spectrum of cloud storage options so that readers can select the right storage type for their specific workloads: the high-performance demands of block storage, the cost-effective scalability of blob storage, or the familiar file system access of NFS solutions.

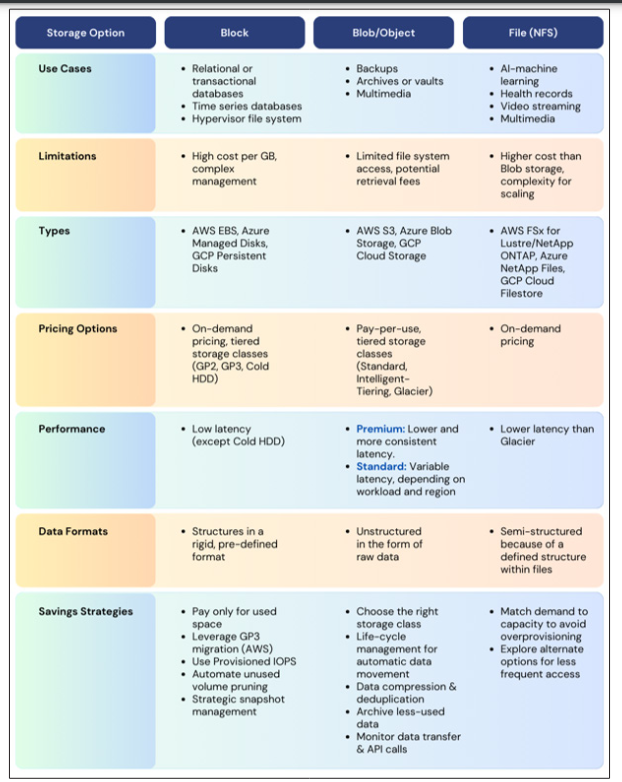

Figure 1 comprehensively compares three primary storage options - block, blob/object, and file. It summarizes the key characteristics of the three categories and highlights the distinct use cases to help users make informed storage decisions.

Block storage is the bedrock for applications requiring high-performance, persistent data access (e.g., databases, virtual machines). Services like AWS EBS, Azure Managed Disks, and GCP Persistent Disks offer robust solutions, but cost optimization within this realm demands a strategic approach. Block storage works well for structured data such as relational and transactional databases. While offering low latency and high performance, it comes with a higher cost per gigabyte and requires more complex management due to its granular block-level data access.

Blob (object) storage handles the ever-growing volume of unstructured data that demands cost-effective storage (e.g., backups, archives, and media files). Object storage offers high scalability and affordability. However, cost optimization within this realm requires a strategic approach, because object storage lacks traditional file system access and can incur retrieval fees. Examples include AWS S3, Azure Blob Storage, and GCP Cloud Storage.

File storage is typically exposed through the Network File System (NFS) protocol and similar shared file systems, offered by services like Amazon EFS, FSx for Lustre, NetApp ONTAP, Azure NetApp Files, and GCP Cloud Filestore. This network-attached storage solution provides a shared file system, allowing multiple users to collaborate on, access, and modify files. However, while the interface may be familiar, cost optimization within this realm demands a fresh perspective. Furthermore, scaling poses challenges when accommodating massive datasets.

Figure 1: Comparison Chart of the Three Primary Cloud Storage Options

Selecting the optimal cloud storage type for your specific workload transcends mere technical configuration. It represents a strategic decision with profound ramifications for cost optimization, performance efficiency, and overall operational effectiveness. A solid understanding of the three primary cloud storage types (block, blob/object, and file (NFS)) empowers you to make informed choices that unlock many benefits. You can optimize your budgetary allocation by paying solely for the features and performance your workload demands. Your applications can run faster when supported by storage infrastructure tailored to their specific requirements. Operational streamlining becomes a reality, minimizing management complexities and allowing you to focus on what truly matters: your data and applications.

This journey toward unlocking the optimal cloud storage solution demands a deep dive into each type. Remember, the perfect choice resembles a precisely positioned puzzle piece, uniquely tailored to your needs and seamlessly integrated into your cloud ecosystem. Embrace the exploration and discover the path to a cost-efficient, performant, and streamlined cloud experience. Here's a breakdown of the three main types:

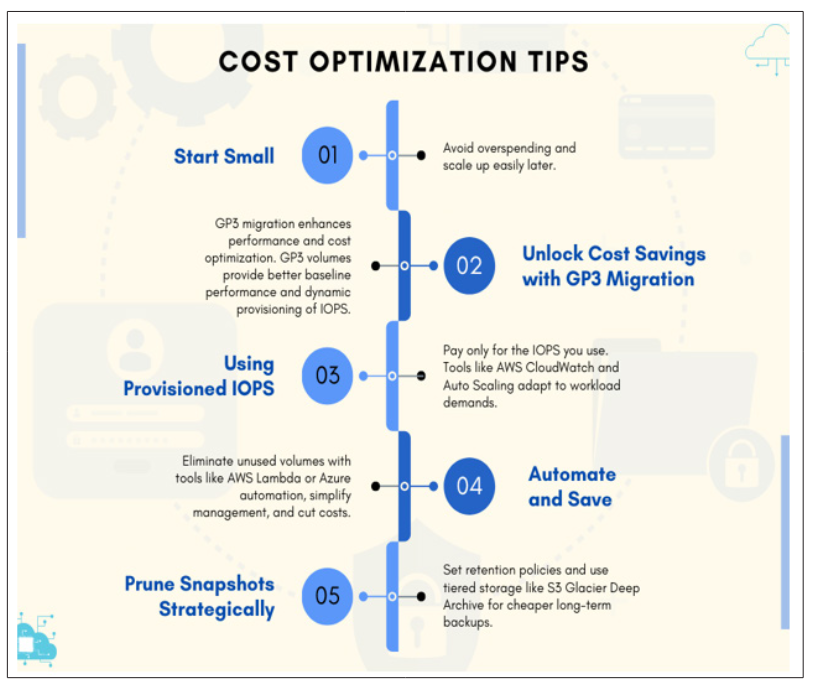

Start Small: When optimizing costs in block storage, the mantra is "Start small, scale fast." Don't over-provision at the outset, because cloud environments offer unparalleled flexibility. Unlike traditional on-premises storage, where scaling up usually meant painful downtime and hardware upgrades, in the cloud, adding disk space is often a matter of clicks or API calls. You can adjust your capacity to meet real-world demand, ensuring you only pay for what you use. However, remember that downsizing in block storage can be more challenging. Once resources are allocated to running applications, removing them can disrupt operations. Therefore, accurate workload estimations and careful initial provisioning are crucial. By leveraging monitoring tools and historical data analysis, you can make informed decisions about your starting capacity and avoid the hassle of potential downsizing later.

(AWS): Migrate GP2 volumes to GP3 for improved performance and lower cost. This migration offers considerable advantages in terms of both performance and cost optimization.

GP3 volumes provide a baseline of 3,000 Input/Output Operations Per Second (IOPS) per volume regardless of size, whereas GP2's baseline performance scales with volume size. Furthermore, GP3 allows IOPS and throughput to be provisioned independently of capacity (up to 16,000 IOPS and 1,000 MiB/s) as workload demands require.

This scalability, coupled with a lower cost per gigabyte compared to GP2, presents a compelling opportunity for the cost-effective optimization of storage resources. The migration process is facilitated by readily available AWS tools and comprehensive documentation, minimizing potential disruptions and downtime. However, careful planning and analysis are crucial for a successful migration. Thoroughly assessing workload performance requirements and accurately sizing GP3 volumes are essential pre-migration steps.
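As a rough illustration of the sizing and cost comparison described above, the following sketch estimates the monthly cost of a GP2 volume and of a GP3 volume provisioned to a chosen IOPS and throughput level. The per-unit prices are assumptions for illustration only (actual prices vary by region and over time), and the function names are hypothetical. The GP2 baseline rule (3 IOPS per GiB, with a floor of 100 and a cap of 16,000) and the GP3 included baseline (3,000 IOPS and 125 MiB/s) reflect AWS's published volume specifications.

```python
# Illustrative prices only (assumptions); check current regional pricing.
GP2_PER_GB = 0.10            # USD per GB-month
GP3_PER_GB = 0.08            # USD per GB-month
GP3_PER_EXTRA_IOPS = 0.005   # USD per provisioned IOPS-month above the baseline
GP3_PER_EXTRA_MIBPS = 0.04   # USD per MiB/s-month above the baseline

GP3_INCLUDED_IOPS = 3000     # included with every gp3 volume
GP3_INCLUDED_MIBPS = 125     # included with every gp3 volume


def gp2_baseline_iops(size_gib):
    """gp2 baseline: 3 IOPS per GiB, at least 100, at most 16,000."""
    return max(100, min(16000, 3 * size_gib))


def gp2_monthly_cost(size_gib):
    """gp2 is billed on capacity only."""
    return size_gib * GP2_PER_GB


def gp3_monthly_cost(size_gib, iops=GP3_INCLUDED_IOPS,
                     throughput_mibps=GP3_INCLUDED_MIBPS):
    """gp3 is billed on capacity plus any IOPS/throughput above the baseline."""
    extra_iops = max(0, iops - GP3_INCLUDED_IOPS)
    extra_mibps = max(0, throughput_mibps - GP3_INCLUDED_MIBPS)
    return (size_gib * GP3_PER_GB
            + extra_iops * GP3_PER_EXTRA_IOPS
            + extra_mibps * GP3_PER_EXTRA_MIBPS)


if __name__ == "__main__":
    size = 2000  # GiB
    iops_needed = gp2_baseline_iops(size)  # match what gp2 delivered
    print(f"gp2: {gp2_monthly_cost(size):.2f} USD/month")
    print(f"gp3: {gp3_monthly_cost(size, iops=iops_needed):.2f} USD/month")
```

Under these assumed prices, a 2,000 GiB GP2 volume costs about $200 per month, while a GP3 volume provisioned to the same 6,000 IOPS costs about $175. Throughput requirements should be checked separately, since extra throughput is billed on GP3.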

Additionally, leveraging AWS performance testing tools can ensure a seamless application transition. Post-migration optimization remains critical for maximizing cost savings and efficiency. Utilizing Amazon CloudWatch for continuous monitoring and performance optimization of GP3 volumes allows organizations to fine-tune their storage resources and maintain optimal cost-effectiveness.

Use Provisioned IOPS When Needed: Migrating to GP3 might be tempting for its speed, but remember, cost efficiency thrives on precision. Consider provisioned IOPS as a powerful tool for optimizing performance without breaking the bank. By analyzing your application's IOPS requirements, you can tailor your storage to deliver the exact performance needed, saving on unnecessary overprovisioning. Similarly, provisioning IOPS allows you to match your storage capabilities to your workload's real demands, ensuring responsiveness without excess horsepower. This can be done by analyzing your application's historical IOPS usage to understand its needs, setting a custom IOPS limit instead of relying on predefined tiers, and paying only for the IOPS you utilize, avoiding wasted resources and optimizing your cloud budget.

Remember, flexibility is key. Integrate automation scripts with continuous monitoring tools like Amazon CloudWatch to adjust your provisioned IOPS based on observed usage. This approach preserves the performance your workload needs while keeping your budget on track.
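One way to turn historical IOPS usage into a provisioning target is sketched below. It takes a list of observed IOPS samples (for example, exported from a monitoring tool), picks a high percentile, adds headroom, and clamps the result to the GP3 range of 3,000 to 16,000 IOPS. The percentile, the headroom, and the function names are assumptions chosen for illustration, not a recommendation from the source.

```python
import math

GP3_MIN_IOPS = 3000    # gp3 baseline; provisioning below this saves nothing
GP3_MAX_IOPS = 16000   # gp3 per-volume maximum


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list (pct is an integer 1..100)."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def recommend_iops(samples, pct=95, headroom_pct=20):
    """Suggest provisioned IOPS: chosen percentile plus headroom, clamped to gp3 limits."""
    target = percentile(samples, pct) * (100 + headroom_pct) / 100
    return int(min(GP3_MAX_IOPS, max(GP3_MIN_IOPS, math.ceil(target))))


if __name__ == "__main__":
    # Hypothetical history: mostly quiet, with occasional bursts to 5,000 IOPS.
    history = [1000] * 90 + [5000] * 10
    print(recommend_iops(history))
```

Using a percentile rather than the maximum avoids sizing for a single outlier; a workload with strict latency requirements might justify a higher percentile or more headroom.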

Automate Unused Volume Pruning: Unused volumes in block storage quietly siphon off cloud budgets. Manually reclaiming them is tedious and error-prone, potentially leaving volumes forgotten and accumulating charges. Automation helps here. Tools like AWS Lambda and Azure Automation can monitor your volumes and flag or remove those that have remained detached and inactive for a set period. This saves money, simplifies management, boosts resource utilization, and ensures your cloud storage serves a clear purpose rather than generating hidden costs.
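The selection logic behind such automation can be sketched independently of any provider SDK. The example below assumes the caller has already collected, for each volume, its state and the time it became unattached; the `Volume` record and its `unattached_since` field are hypothetical, and the 30-day grace period is an arbitrary illustration. A scheduled function could feed real inventory data into this filter and then snapshot or delete the returned volumes.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional


@dataclass
class Volume:
    volume_id: str
    state: str                             # e.g. "available" (unattached) or "in-use"
    unattached_since: Optional[datetime]   # hypothetical field, None if attached


def find_prunable(volumes: List[Volume], now: datetime, grace_days: int = 30) -> List[str]:
    """Return IDs of volumes that have been unattached for at least grace_days."""
    cutoff = now - timedelta(days=grace_days)
    return [
        v.volume_id
        for v in volumes
        if v.state == "available"
        and v.unattached_since is not None
        and v.unattached_since <= cutoff
    ]


if __name__ == "__main__":
    now = datetime(2024, 6, 1, tzinfo=timezone.utc)
    inventory = [
        Volume("vol-a", "available", now - timedelta(days=45)),
        Volume("vol-b", "in-use", None),
        Volume("vol-c", "available", now - timedelta(days=5)),
    ]
    print(find_prunable(inventory, now))
```

Keeping the grace period explicit gives teams time to reattach a volume that was detached only temporarily.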

Strategic Snapshot Management: Snapshots, while essential for data protection, can snowball into a significant cost burden if left unchecked. Retaining obsolete snapshots for extended periods inflates unwanted storage expenses, diverting resources from strategic initiatives.

To tame this cost growth, implement automated snapshot pruning based on predefined retention policies that take compliance into account. This is both a critical cost-optimization strategy and a responsible data governance practice. Organizations can establish tiered retention policies based on data criticality and regulatory requirements to ensure robust protection. Frequently accessed data may warrant shorter retention periods, while historical records require longer archiving timeframes. Tools like Amazon Data Lifecycle Manager automate snapshot deletion according to these predefined periods, reducing the risk of human error and ensuring proactive cost management.
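A tiered retention policy of this kind reduces to a small amount of logic, sketched below. The tier names, the retention periods, and the legal-hold set are hypothetical values for illustration; a managed lifecycle service would normally enforce the equivalent rules.

```python
from datetime import date, timedelta

# Hypothetical retention periods per criticality tier, in days.
RETENTION_DAYS = {"critical": 365, "standard": 90, "scratch": 14}


def snapshots_to_delete(snapshots, today, legal_hold=frozenset()):
    """Return IDs of snapshots older than their tier's retention period.

    snapshots: iterable of (snapshot_id, tier, created_on) tuples.
    legal_hold: snapshot IDs that must be kept regardless of age.
    """
    expired = []
    for snapshot_id, tier, created_on in snapshots:
        if snapshot_id in legal_hold:
            continue  # compliance overrides age-based pruning
        if today - created_on > timedelta(days=RETENTION_DAYS[tier]):
            expired.append(snapshot_id)
    return expired


if __name__ == "__main__":
    today = date(2024, 6, 1)
    inventory = [
        ("snap-1", "scratch", date(2024, 5, 1)),
        ("snap-2", "standard", date(2024, 5, 1)),
        ("snap-3", "critical", date(2022, 1, 1)),
    ]
    print(snapshots_to_delete(inventory, today, legal_hold={"snap-3"}))
```

Treating the legal hold as an explicit override keeps the compliance exception visible in the policy rather than buried in ad hoc exclusions.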

Additionally, exploring tiered snapshot storage options can further optimize costs. Moving older snapshots to cheaper archival storage (for example, the Amazon EBS Snapshots Archive tier, or an archival class such as S3 Glacier Deep Archive for data exported to object storage) provides secure long-term retention at a fraction of the cost of standard snapshot storage. Organizations can balance data protection and financial stewardship by adopting a multi-tiered approach to snapshot management. Figure 2 provides multiple such cost optimization tips.

Figure 2: Cost Customization Strategies for Block Storage

Expanding on a Popular Collaboration Platform Provider’s (Company A) GP3 Migration: A Deeper Dive into Cost Optimization Success

Company A successfully migrated all GP2 volumes to GP3, unlocking $600,000+ in annual savings. Behind that headline figure lies a detailed narrative of strategic planning, meticulous execution, and ongoing optimization that can serve as a roadmap for other organizations. Let's delve deeper into the critical factors behind the results achieved through this GP3 migration:

Pre-Migration Analysis: Before diving into the migration itself, Company A, a prominent player in the communication sector, planned its transition through a thorough workload assessment and cost-benefit calculations. They carefully analyzed the IOPS and throughput demands of each application residing on GP2 volumes, mapping them to the most fitting GP3 configuration. This in-depth analysis laid the groundwork for robust cost projections. They factored in the existing expenses for GP2 storage, the anticipated savings from GP3's lower per-gigabyte price, and the expected performance boost, resulting in a clear estimate of the return on investment this move would yield. By preparing the ground this carefully, Company A ensured their migration would be as smooth and cost-effective as possible.

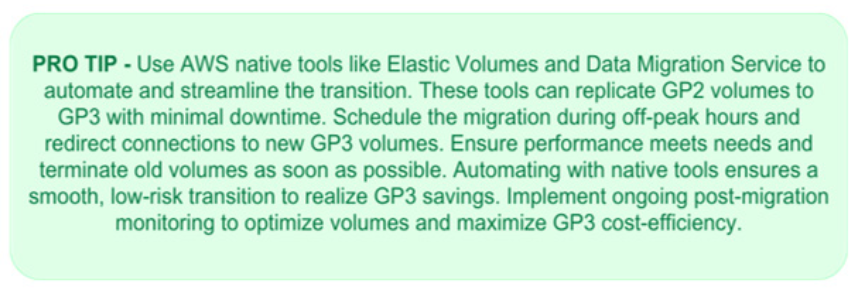

Seamless Migration Execution: Company A understood that minimizing disruption was key to a successful migration. They opted for a phased approach, tackling smaller workloads first. This allowed them to test and refine the process thoroughly, ironing out any wrinkles before expanding to their entire infrastructure. Automation played a crucial role in this seamless execution. Company A entrusted AWS native tools like Elastic Volumes and Data Migration Service to handle data replication and volume updates, reducing the need for manual intervention, minimizing errors, and expediting the entire migration process. This strategic approach ensured a smooth transition with minimal disruption to their platform and users.

Post-Migration Optimization: Company A's commitment to optimization didn't end with the migration itself. They embraced continuous monitoring with tools like Amazon CloudWatch, vigilantly scrutinizing their GP3 volumes for hidden cost inefficiencies. This proactive approach allowed them to identify and eliminate unused resources that might manifest only after the actual migration when workload dynamics play out in the new environment, adjust IOPS allocation dynamically based on evolving demands, and scale volumes to match actual workload needs, squeezing every penny of value out of their storage investment. Furthermore, they expanded automation beyond initial data migration, entrusting tasks like snapshot rotation and volume pruning to smart tools. This minimized manual intervention and potential errors and freed their IT resources for more strategic endeavors, ensuring long-term efficiency and cost control within their GP3 environment.

By emulating Company A's comprehensive approach, other organizations can replicate their success and unlock significant cost savings from strategic block storage optimization using GP3.

Choosing the Right Storage Class: Selecting the optimal storage class for each object lies at the heart of S3 cost optimization. S3 offers diverse options, each catering to specific access patterns and durability requirements. Understanding these nuances is key:

Life-Cycle Policies - Automate for Efficiency: S3's life-cycle management capabilities empower you to define rules for automatic data movement between storage classes or expiration based on access patterns and retention policies. This proactive approach ensures data remains readily available while minimizing unnecessary storage costs.
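For illustration, a life-cycle rule of this kind can be expressed as a plain dictionary in the shape that the S3 lifecycle configuration API expects. The sketch below only builds and validates the structure; the prefix, the day counts, and the helper name `build_lifecycle_rule` are hypothetical. The resulting dictionary could be passed as the `LifecycleConfiguration` argument of boto3's `put_bucket_lifecycle_configuration`, which is not called here.

```python
def build_lifecycle_rule(prefix, ia_after_days, glacier_after_days, expire_after_days):
    """Build one S3 lifecycle rule: Standard -> Standard-IA -> Glacier -> expire."""
    # S3 requires objects to be stored at least 30 days before moving to Standard-IA.
    if ia_after_days < 30:
        raise ValueError("transition to STANDARD_IA requires at least 30 days")
    if not ia_after_days < glacier_after_days < expire_after_days:
        raise ValueError("day thresholds must be strictly increasing")
    return {
        "ID": f"tier-{prefix.strip('/') or 'all'}",
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [
            {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
            {"Days": glacier_after_days, "StorageClass": "GLACIER"},
        ],
        "Expiration": {"Days": expire_after_days},
    }


# Hypothetical policy: logs move to IA after 30 days, to Glacier after 90,
# and are deleted after one year.
lifecycle_configuration = {"Rules": [build_lifecycle_rule("logs/", 30, 90, 365)]}

if __name__ == "__main__":
    import json
    print(json.dumps(lifecycle_configuration, indent=2))
```

Validating the thresholds before submitting the rule catches ordering mistakes locally instead of at API call time.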

Data Compression and Deduplication: Implementing data compression and deduplication techniques shrinks your data footprint, translating directly to cost savings. With smaller data sizes, you reduce both storage charges and data transfer expenses.
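A minimal sketch of both techniques, using only the Python standard library, is shown below. It measures how much gzip shrinks a payload and removes exact duplicate blobs by content hash. The sample data and function names are illustrative assumptions; production systems typically use block-level or provider-side deduplication rather than whole-object hashing.

```python
import gzip
import hashlib


def compressed_size(data: bytes) -> int:
    """Size in bytes of the gzip-compressed payload."""
    return len(gzip.compress(data))


def deduplicate(blobs):
    """Keep one copy of each distinct blob, keyed by SHA-256 digest.

    Returns (unique_blobs_by_digest, bytes_saved).
    """
    unique = {}
    bytes_saved = 0
    for blob in blobs:
        digest = hashlib.sha256(blob).hexdigest()
        if digest in unique:
            bytes_saved += len(blob)  # duplicate: no need to store it again
        else:
            unique[digest] = blob
    return unique, bytes_saved


if __name__ == "__main__":
    log = b"2024-06-01 INFO request served\n" * 1000
    print(f"raw={len(log)} bytes, gzip={compressed_size(log)} bytes")
    unique, saved = deduplicate([log, log, b"other"])
    print(f"{len(unique)} unique blobs, {saved} bytes saved")
```

Highly repetitive data such as logs compresses well, while already-compressed media gains little, so it is worth measuring on representative samples before committing to a scheme.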

Archive Less-Used Data, Embrace Cold Storage: Identify data accessed infrequently and leverage colder storage options like S3 Glacier/Deep Archive. These classes offer significant cost reductions while ensuring secure long-term data preservation. Regularly reviewing access patterns and migrating eligible data becomes a continuous optimization exercise.

Monitor Data Transfer and API Calls: Data transfer can significantly impact your overall S3 bill. Tools like Amazon CloudWatch and AWS Cost Explorer enable you to analyze usage patterns, pinpoint inefficiencies, and optimize network setups. Consider AWS Direct Connect or AWS DataSync for cost-effective data transfer solutions.

Financially Beneficial: From a financial standpoint, Amazon S3 provides a pay-as-you-go pricing scheme. By choosing among S3's storage classes, such as Standard, Intelligent-Tiering, and Glacier, according to the frequency of access and data retrieval requirements, organizations pay only for the level of service each dataset needs.

Company B is a design platform that caters to millions of users and massive amounts of user-generated content.

Problem: One of the primary challenges Company B faced was controlling and administering the ever-growing volume of data on Amazon S3. Storage costs were rising sharply, and Company B wanted to contain them without sacrificing data security or access.

Solutions: Company B used S3 Lifecycle policies and the S3 Storage Class Analysis tool to understand how frequently different data types were accessed. They then transitioned infrequently accessed user content from S3 Standard-IA to the newer S3 Glacier Instant Retrieval class, achieving significant cost savings while maintaining fast retrieval times. Company B prioritized buckets with larger average object sizes to optimize the cost-benefit of migration, as these reached the break-even point faster. They also automated the migration process by leveraging lifecycle policies for seamless and efficient data movement between storage classes.
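The reason larger objects break even faster can be seen with a short calculation: the transition fee is charged per object, while the monthly saving is proportional to object size. The sketch below uses assumed per-GB prices and an assumed per-1,000-requests transition fee purely for illustration; they are not figures from Company B, and actual prices vary by region.

```python
def break_even_months(avg_object_mb,
                      src_price_gb=0.0125,        # assumed USD/GB-month, source class
                      dst_price_gb=0.004,         # assumed USD/GB-month, destination class
                      transition_per_1000=0.02):  # assumed USD per 1,000 transitions
    """Months until the per-object transition fee is repaid by storage savings."""
    size_gb = avg_object_mb / 1024
    monthly_saving = size_gb * (src_price_gb - dst_price_gb)
    if monthly_saving <= 0:
        raise ValueError("destination class must be cheaper and object size positive")
    transition_fee = transition_per_1000 / 1000
    return transition_fee / monthly_saving


if __name__ == "__main__":
    for size_mb in (0.125, 1, 10):
        print(f"{size_mb:>6} MB objects: {break_even_months(size_mb):.2f} months")
```

Under these assumptions, a bucket averaging 10 MB per object recovers its transition cost within the first month, while one averaging 128 KB per object takes well over a year, which is consistent with prioritizing buckets with larger average object sizes.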

Results: Company B saved $300,000 per month ($3.6 million annually) on S3 storage costs. With minimal manual effort, they migrated 80 billion objects in just two days. They now efficiently store over 230 petabytes of data while maintaining high availability and data security.

Company B's success story underscores the importance of proactive data management and strategic utilization of different storage classes in cloud environments. By following their example, companies of all sizes can achieve significant cost savings and optimize their S3 usage for maximum efficiency.

Leveraging Cost-effective Storage Tiers: Cloud storage services often offer multiple storage tiers with varying performance and cost characteristics. Organizations can leverage lower-cost storage tiers for infrequently accessed data by identifying data with distinct access patterns. For instance, Amazon EFS Infrequent Access (IA) with Lifecycle Management automatically migrates less frequently accessed data to a significantly lower storage cost tier while maintaining immediate accessibility within the same file system namespace. This approach can lead to substantial cost savings without compromising data availability.

Exploring Alternative Options: While NFS offers familiar access, it’s crucial to remember that it isn’t always the most cost-effective solution. For data accessed infrequently or primarily used for backups and archives, consider the suitability of blob storage options like Amazon S3 or Azure Blob Storage. These services often offer significantly lower storage costs than NFS, potentially leading to substantial savings for specific use cases.

Beyond the basics, these advanced techniques can further optimize your cloud storage costs:

Leverage Contract and Rate Negotiations: Big data players storing petabytes of information hold significant bargaining power. By negotiating contracts with cloud providers, you can unlock:

• Volume Discounts: Secure significant reductions in per-gigabyte costs based on your projected storage needs.

• Custom Rate Cards: Negotiate unique pricing structures tailored to your specific access patterns and data types.

These negotiations require thorough data usage analysis and an understanding of market trends. However, the potential savings for massive storage requirements can be substantial.
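When preparing for such a negotiation, it can help to model how a graduated rate card would price your projected volume. The sketch below computes the monthly cost under tiered per-terabyte prices; the tier boundaries and prices are entirely hypothetical and do not represent any provider's actual offer.

```python
def tiered_monthly_cost(total_tb, tiers):
    """Monthly cost under graduated pricing.

    tiers: list of (upper_bound_tb or None, price_per_tb) in ascending order;
    None marks the final, unbounded tier.
    """
    cost = 0.0
    lower = 0.0
    for upper, price in tiers:
        if total_tb <= lower:
            break  # all capacity has already been priced
        top = total_tb if upper is None else min(total_tb, upper)
        cost += (top - lower) * price
        lower = top if upper is None else upper
    return cost


# Hypothetical rate card: first 50 TB, next 450 TB, everything above 500 TB.
RATE_CARD = [(50, 23.0), (500, 22.0), (None, 21.0)]

if __name__ == "__main__":
    projected_tb = 600
    flat = projected_tb * RATE_CARD[0][1]
    tiered = tiered_monthly_cost(projected_tb, RATE_CARD)
    print(f"flat list price: {flat:.2f} USD, tiered: {tiered:.2f} USD")
```

Running the same projection against several candidate rate cards makes it easier to compare offers on total cost rather than on headline per-unit prices.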

Compressing redundant data can dramatically shrink storage footprints, reducing storage costs and network transfer charges. Consider:

Remember, compression and deduplication impact performance; weigh data accessibility needs against potential savings.

Consider data volume, security needs, and network limitations when choosing between network transfers and physical device migration.

Backup strategies significantly impact storage requirements and costs. Implement techniques like:

Optimizing backup strategies ensures efficient storage utilization, avoids unnecessary retention costs, and maintains essential data protection.

Predictable workloads present an opportunity for significant cost savings through Azure’s storage reservations. By committing to a specific storage capacity for a defined period, you unlock substantial discounts compared to on-demand pricing. Carefully assess your predictable storage needs and consider leveraging the following solutions to secure long-term cost benefits:

These policies dictate data retention periods based on regulatory requirements and business needs, preventing the unnecessary accumulation of outdated information. This is carried out through:

Embracing proactive retention policies and smart tiering empowers you to balance readily available essential data and efficient disposal of obsolete information, fostering optimization in cost, data governance, and overall cloud storage management.

Conceptualizing your cloud storage as a dynamic ecosystem rather than a static repository unlocks significant optimization opportunities. This is where data archival and lifecycle management come into play, automatically organizing and optimizing your data based on its usage and value. This dynamic movement ensures:

Tools like Amazon CloudWatch and Azure Storage Insights provide granular data usage insights, empowering you to define customized lifecycle rules based on access patterns and data age. This data-driven approach ensures your storage resources align with your needs, maximizing performance and cost savings.
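A rule based on data age can be prototyped offline before it is encoded in a provider's lifecycle policy. The sketch below assigns each object to a tier from the number of days since its last access and totals the bytes that would land in each tier. The tier names and thresholds are hypothetical, and the access-age figures are assumed to come from whatever usage report your monitoring tool exports.

```python
from collections import defaultdict

# Hypothetical thresholds: (days since last access must be below this, tier name).
TIER_THRESHOLDS = [(30, "hot"), (90, "cool"), (365, "cold")]
FALLBACK_TIER = "archive"


def choose_tier(days_since_access):
    """Pick the first tier whose threshold the object's access age is below."""
    if days_since_access < 0:
        raise ValueError("days_since_access must be non-negative")
    for limit, tier in TIER_THRESHOLDS:
        if days_since_access < limit:
            return tier
    return FALLBACK_TIER


def bytes_per_tier(objects):
    """Sum object sizes per tier; objects is an iterable of (size_bytes, days_since_access)."""
    totals = defaultdict(int)
    for size_bytes, days_since_access in objects:
        totals[choose_tier(days_since_access)] += size_bytes
    return dict(totals)


if __name__ == "__main__":
    sample = [(100, 1), (200, 45), (300, 400), (50, 2)]
    print(bytes_per_tier(sample))
```

Multiplying each tier's total by its per-gigabyte price gives a quick estimate of what a proposed set of thresholds would cost before any data is actually moved.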

The ascent of cloud storage has fundamentally reshaped data management, offering unparalleled reach and scalability. Yet, maximizing cost efficiency within this seemingly boundless realm remains a complex labyrinth. This paper has served as a compass, navigating the intricate pathways of optimal cloud storage utilization across leading providers: AWS, Azure, and GCP.

Beyond selecting the most appropriate storage type (block, blob, or file), we have delved into advanced techniques that unlock substantial cost savings. From leveraging contractual negotiations for high-volume users to employing dedicated data transfer devices for streamlined on-premises migration, this paper has equipped you with a diverse arsenal of optimization tools.

However, cost optimization transcends mere technical solutions. We have emphasized the importance of best practices in data lifecycle management, including tiered backups and granular retention policies, ensuring data resides in the most cost-effective tier based on access frequency and legal requirements. Continuous analysis of cloud storage utilization and adaptation to evolving needs is fundamental to maintaining optimal performance while ensuring long-term financial sustainability.

This paper serves as a valuable roadmap for organizations seeking to master the complexities of cloud storage cost optimization. By implementing the advanced techniques and best practices outlined herein, cloud users can confidently unlock significant cost savings and maximize the return on their cloud storage investments. Remember, the journey toward cost optimization is continuous, demanding active monitoring, strategic adaptation, and unwavering commitment to efficiency. By embracing these principles, organizations can navigate the intricate maze of cloud storage costs and emerge victorious, securing a future of sustainable growth and data management excellence [1-5].