Author(s): Vijay Kartik Sikha, Dayakar Siramgari and Laxminarayana Korada

Generative AI technologies are reshaping multiple sectors by enhancing how tasks are executed, and information is processed. With advancements from companies like OpenAI, Microsoft, and Google, these AI agents have become pivotal in automating workflows, generating text that resembles human writing, and offering actionable insights. Central to harnessing their potential is mastering prompt engineering-the art of designing effective queries to optimize AI responses. This article delves into the burgeoning field of generative AI, emphasizing the significance of prompt engineering in enhancing the interaction with these sophisticated tools. It provides an overview of leading AI tools like ChatGPT, Microsoft Copilot, and Google Bard, explores the technical underpinnings of these models, and addresses key aspects of prompt engineering including linguistic nuances, iterative refinement, and bias mitigation. The article also examines practical strategies for mastering prompt engineering, discusses security concerns such as prompt injection attacks and data privacy, and highlights future trends in AI technology. Through case studies and expert insights, the article underscores the critical role of prompt engineering in maximizing the effectiveness and ethical use of generative AI systems.

In recent years, the field of artificial intelligence (AI) has undergone remarkable advancements, particularly in Natural Language Processing (NLP) and generative models, fundamentally altering human-computer interaction by enabling AI agents to comprehend and generate human-like text based on user-provided prompts. This paradigm shift spans diverse domains, including creative writing, content generation, scientific research, and automated customer service, highlighting the broad-reaching implications of AI capabilities [1]. At the heart of this transformative capability lies prompt engineering a critical practice involving the crafting of precise, context-aware instructions or queries to guide AI models towards desired outputs [2]. Mastery of prompt engineering empowers users to optimize interactions with AI agents, whether for generating narratives, solving scientific problems, or automating tasks. Generative AI agents like OpenAI's GPT series, built on transformer architectures, have evolved from basic predictive models to sophisticated systems capable of coherent paragraph generation, multilingual translation, and complex data summarization through techniques such as self-supervised learning. However, challenges persist in prompt engineering, particularly concerning bias mitigation in AI-generated content and addressing model limitations in factual accuracy and context comprehension, necessitating refined prompt strategies and post-processing methodologies [1]. The application domains of prompt engineering are expansive, encompassing creative content creation, scientific hypothesis generation, and enhanced customer service interactions, illustrating its transformative potential across industries [3]. Looking forward, ongoing research aims to enhance AI's adaptability and interpretability through advancements in zero-shot and few-shot learning, alongside efforts to improve model transparency and ethical deployment through interdisciplinary collaborations.

Generative AI agents play a crucial role in various applications, each offering distinct capabilities. For instance, OpenAI’s GPT series, including GPT-4, represents a significant leap in natural language processing. Utilizing a transformer-based architecture, GPT-4 excels in generating coherent and contextually relevant text, supporting applications ranging from conversational agents to creative writing and data analysis.

Similarly, Microsoft Copilot, integrated into Microsoft 365 applications, enhances productivity by providing context-aware suggestions and automating routine tasks. It streamlines workflows by assisting with document drafting, presentation creation, and data analysis within the Microsoft ecosystem.

Google Bard, on the other hand, focuses on improving conversational AI and information retrieval. Leveraging Google’s extensive search capabilities, Bard aims to deliver accurate responses to user queries, thereby enhancing natural language interactions across various conversational applications.

To effectively engage with these AI systems and optimize their utility, it is essential to understand how AI models interpret and respond to prompts. This involves grasping the technical workings of AI models, such as their architecture and tokenization processes, as well as recognizing their inherent limitations, including biases, knowledge cutoffs, and gaps in expertise. Mastery of these aspects enables users to craft prompts that produce accurate, relevant, and insightful responses, thus enhancing the overall effectiveness of AI interactions.

To interact effectively with AI models, it is essential to understand their technical underpinnings. AI models, especially those built on advanced machine learning techniques, operate through intricate architectures designed to process and generate text. One prominent example is the transformer architecture, used in models like GPT (Generative Pre-trained Transformer).

Transformers use a mechanism known as self-attention or scaled dot-product attention. This mechanism allows the model to weigh the significance of different words in a sentence relative to each other. For instance, in the sentence "The cat sat on the mat," the model needs to determine how "cat" relates to "sat" and "mat." Self-attention mechanisms facilitate this by creating contextual embeddings for each token, which helps in generating more contextually relevant responses.

Understanding this mechanism helps in crafting prompts that align with the model’s capabilities. For instance, including clear contextual information in prompts enables the model to generate responses that are coherent and contextually appropriate.

AI models like GPT-4 process text in chunks called tokens. Tokens can be words, parts of words, or even punctuation marks. The model’s ability to handle prompts depends on its tokenization process, which breaks down input text into manageable units. Effective prompt engineering involves structuring input text in a way that fits within the model’s token constraints and ensures that the most relevant tokens are included. For example, providing a concise and specific prompt avoids ambiguity and helps the model generate a focused response.

AI models are trained on large datasets that encompass a wide range of topics and linguistic patterns. The training process involves adjusting model parameters to minimize the difference between predicted and actual outputs based on this data. Understanding the nature of the training data, its diversity, scope, and any potential biases can aid in formulating the prompts. For instance, a model trained predominantly on academic texts might excel at generating formal responses but might struggle with colloquial language or highly specialized jargon.

Equally important as understanding technical mechanics is recognizing the limitations of AI models. These limitations can significantly impact how models interpret prompts and generate responses.

AI models generate responses based on patterns learned from data rather than genuine comprehension or experience. This means they can produce responses that are syntactically correct but lack real-world relevance or nuance. For instance, if a prompt is vague or lacks sufficient detail, the model might generate a plausible sounding but incorrect response. Effective prompting involves providing clear and specific information to guide the model towards generating more accurate outputs.

Most AI models have a cutoff of knowledge, which signifies that they are trained on data up to a certain point in time and do not have information about events or developments that occurred afterward. For example, a model trained with data up to 2021 would not be aware of events or advancements in 2023. When crafting prompts, it’s important to be aware of this limitation and focus on topics or information that falls within the model’s training data period. Asking about historical or general knowledge topics can yield more reliable results than inquiring about recent events.

AI models can exhibit biases based on the data they were trained on. These biases can influence the responses generated, sometimes reflecting or amplifying existing stereotypes or inaccuracies present in the training data. Understanding these potential biases is crucial for crafting prompts that mitigate their impact. For instance, framing prompts in a way that encourages balanced perspectives or avoids leading questions can help in obtaining more objective responses.

AI models may also struggle with highly specialized or niche topics if they were not extensively represented in their training data. Crafting prompts for such topics might require providing additional context or framing questions in a way that compensates for the model’s potential lack of expertise in the area.

The proliferation of generative AI agents highlights the importance of understanding how these systems process information and generate output. At the core of these processes is the use of sophisticated machine learning architectures, particularly transformer models, which underpin many leading generative AI systems like OpenAI’s GPT series and Google’s Bard.

Generative AI models utilize advanced tokenization and embedding techniques to handle and interpret input data. Tokenization breaks down text into manageable pieces, or tokens, which are then converted into numerical representations known as embeddings. These embeddings allow the AI to process and understand the input text in a form that is suitable for analysis and generation. Transformer models leverage self-attention mechanisms to assess the importance of different tokens relative to each other, ensuring that the generated output maintains coherence and relevance to the input prompt.

The ability of generative AI agents to produce coherent and contextually relevant text is a result of their extensive training on diverse datasets. These models learn patterns, relationships, and contextual information from vast amounts of data, which they use to generate responses. The output is generated by predicting the most probable next token in a sequence, based on the input provided. This process allows for the creation of text that is contextually appropriate and aligned with user intent.

As these models become more prevalent, managing issues such as bias and ambiguity in output generation becomes increasingly important. Biases inherent in training data can affect the fairness and accuracy of generated responses. Additionally, models may struggle with ambiguous prompts, which can lead to less relevant or unclear outputs. Addressing these challenges involves refining prompt engineering and continuously evaluating and adjusting models to ensure optimal performance across various applications. This approach is essential for leveraging the full potential of generative AI agents and achieving optimal outcomes in their diverse applications.

Prompt engineering involves designing and refining input queries or instructions to effectively interact with AI models, especially those generating natural language outputs. This practice focuses on crafting prompts that clearly communicate the user’s intent and provide adequate context to guide the AI in producing accurate and relevant responses. Successful prompt engineering demands a thorough understanding of the AI’s processing capabilities and limitations, allowing users to frame queries that align with the model’s strengths.

The significance of prompt engineering is evident in its substantial impact on the quality and utility of AI-generated outputs. Well- crafted prompts improve the AI’s ability to comprehend and respond effectively, resulting in more precise and contextually relevant information. This skill is vital across various applications, including content creation, data analysis, customer support, and conversational agents. Mastering prompt engineering enhances interactions with AI systems, ensuring that the responses are actionable and valuable. Furthermore, it helps address challenges such as ambiguity and bias, contributing to more reliable and equitable outputs. With AI technologies becoming more sophisticated, prompt engineering has emerged as a crucial skill for maximizing the effectiveness of these tools [4]. Thus, prompt engineering is essential for leveraging the full potential of generative AI technologies.

In the field of artificial intelligence, particularly with systems involving NLP, understanding linguistic nuances is vital for crafting effective prompts. Two fundamental aspects of this process are how prompts are constructed to align with NLP capabilities and managing context and ambiguity to ensure accurate and relevant AI responses.

NLP enables AI models to comprehend and generate human language. The effectiveness of these models—such as OpenAI’s GPT-4 or Google's BERT—depends significantly on how well prompts align with their processing mechanisms. NLP models utilize sophisticated algorithms to interpret text, including tokenization, where text is broken into manageable pieces, and embeddings, which transform these pieces into numerical representations [5]. For AI to produce accurate responses, prompts must be crafted with clarity and specificity. Research indicates that well-defined prompts improve model performance by minimizing misunderstanding and ensuring that the AI generates contextually appropriate responses. For example, a prompt like "Explain the significance of CRISPR technology in genetic research" provides clear direction and context, facilitating a more targeted and relevant output compared to a vague prompt such as "Tell me about CRISPR technology."

Effective prompt engineering also involves managing context and ambiguity. AI models rely on the context provided in prompts to generate relevant responses. A study by Radford et al. highlights that prompts with insufficient context often leads to less accurate or irrelevant outputs because the AI struggles to infer the intended meaning [2]. Clear and detailed prompts help in reducing ambiguity and guiding the AI to focus on the user’s specific needs. For instance, specifying "Describe the impact of renewable energy on urban infrastructure" is more effective than simply asking "Discuss energy impacts," as it narrows down the scope and provides necessary context. This approach ensures that the generated responses are directly aligned with user expectations, reducing the potential for misinterpretation.

Research underscores the importance of crafting prompts that are both precise and contextually informative. Effective prompt engineering significantly enhances the quality of AI-generated responses by aligning the input with the model's strengths and capabilities. Furthermore, addressing ambiguity and providing adequate context are crucial for avoiding misinterpretation and achieving optimal outcomes.

Iterative Refinement is a crucial process in optimizing interactions with AI systems, particularly in crafting effective prompts. This involves two key strategies: trial and error and utilizing a feedback loop.

This approach emphasizes the iterative nature of prompt engineering. In practice, it means continuously testing different prompts to see how well they perform in generating the desired output. For example, if an AI model produces irrelevant or unsatisfactory responses to an initial prompt, users can adjust the wording, add more context, or refine their questions based on the model's output. This iterative process helps in identifying which prompt formulations yield the most accurate and relevant responses. By experimenting with various approaches and learning from each attempt, users gradually enhance the effectiveness of their prompts. Research shows that this method is effective in fine- tuning AI interactions, as it allows for the gradual improvement of prompt quality based on observed performance.

Integrating the AI's responses into a feedback loop is another critical aspect of iterative refinement. This involves analyzing the outputs generated by AI and using this information to further refine and improve the prompts. For instance, if the AI’s response reveals that a prompt is too broad or ambiguous, users can adjust the prompt to address these issues. This ongoing cycle of feedback and refinement ensures that prompts are continuously improved based on real-world performance, leading to more precise and effective interactions. This approach is supported by studies demonstrating that feedback-driven prompt adjustments lead to enhanced AI accuracy and relevance.

Iterative refinement through trial and error and feedback loops is essential for optimizing prompt engineering. By continuously testing and refining prompts and leveraging AI responses to make informed adjustments, users can achieve more accurate and relevant outputs, thereby enhancing the overall effectiveness of their interactions with AI systems.

Understanding Bias: AI models are trained on large datasets that may include historical and societal biases. Consequently, poorly crafted prompts can result in biased or unfair outputs. For example, prompts that inadvertently reflect gender stereotypes can lead to AI-generated responses that reinforce those stereotypes [6].

Designing Inclusive Prompts: To mitigate bias, prompts should be designed to be inclusive and neutral. This involves:

By thoughtfully designing prompts and continuously evaluating their impact, it is possible to develop AI systems that are more equitable and less biased, thus supporting both accuracy and ethical considerations in AI applications.

Several methodologies exist in prompt engineering, focusing on structured approaches to enhance the performance of large language models (LLMs). Foundational techniques such as role- prompting, one-shot, and few-shot prompting, are essential for guiding AI responses effectively. These methods provide a basis for more advanced strategies, ensuring that the AI can generate accurate and contextually relevant outputs. The understanding of strengths and limitations of these techniques is important to optimize their application in different scenarios.

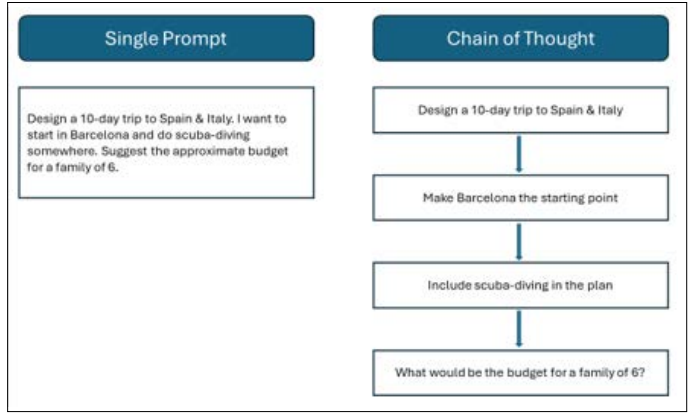

Large Language Models (LLMs) excel at identifying patterns within vast datasets, yet they frequently encounter difficulties with complex reasoning tasks, often arriving at correct answers by chance rather than through a genuine understanding of the underlying logic. The Chain of Thought (CoT) prompting technique addresses this issue by methodically guiding the LLM through the reasoning process in a step-by-step manner. This approach involves breaking down problems into a sequence of intermediate steps, justifications, or pieces of evidence, thereby facilitating a logical progression towards the final output. By providing structured examples that demonstrate how to decompose and logically solve problems, CoT prompting enhances the model’s ability to perform intricate reasoning tasks effectively

Figure 1: Example: Chain of Thought

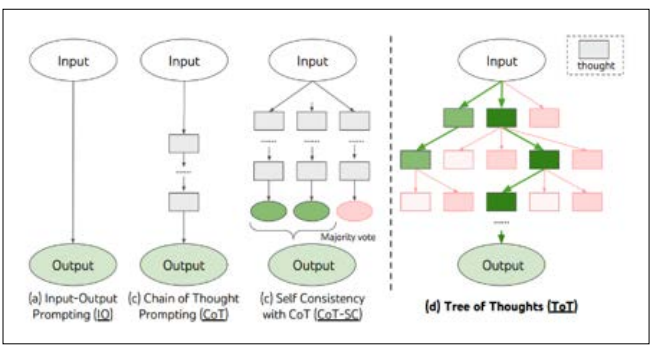

The integration of external knowledge through plugins helps reduce machine hallucinations and improve response accuracy. This integration is crucial for enhancing the reliability of AI outputs. For complex tasks that need exploration or strategic planning, traditional prompting techniques are often inadequate. The Tree of Thoughts (ToT) framework builds on chain-of-thought prompting and promotes exploring different ideas that act as intermediate steps for solving problems with language models. (Nextra, n.d.)

Figure 2: Tree of Thoughts Framework

Mastering prompt engineering involves several key steps:

Several resources are available to aid in learning prompt engineering:

Organizations can develop effective learning repositories and business group-specific prompt libraries by following these strategies:

Creating Learning Repositories

Creating Business Group-Specific Prompt Libraries

By implementing these strategies, organizations can create comprehensive learning repositories and business group-specific prompt libraries that enhance employees' skills in prompt engineering and ensure more effective and fair AI outputs.

The approach to prompt engineering varies depending on the user persona:

In the evolving landscape of artificial intelligence, the design and refinement of prompts, specific instructions given to AI models are crucial for ensuring reliable, cost-effective, and actionable outcomes. Inefficient prompt engineering can lead to a cascade of issues, including unreliable outputs, workflow disruptions, increased costs, and poor decision-making. AI models, especially those based on natural language processing, depend on the clarity and precision of prompts to generate accurate responses. When prompts are vague or poorly designed, the AI may produce irrelevant or incorrect information, undermining its utility and eroding trust in technology. This inefficiency can also block workflows by introducing delays and bottlenecks, particularly in time-sensitive industries like customer support or data analysis, where misaligned prompts can necessitate additional clarifications and corrections, thereby consuming valuable resources and impacting productivity. Financially, the repercussions are significant; repeated interactions to refine or correct outputs lead to higher computational and labor costs, straining budgets and reducing the return on investment in AI technologies. Moreover, the most critical consequence is poor decision-making: flawed prompts can mislead decision-makers, resulting in erroneous conclusions and suboptimal strategies that jeopardize an organization’s success and reputation. Hence, investing in effective prompt design is essential to harness the full potential of AI and achieve optimal results.

In the realm of artificial intelligence, addressing security concerns is critical to maintaining the integrity and reliability of AI systems. Two major concerns in this area are prompt injection attacks and data privacy.

Prompt injection attacks represent a significant threat where malicious actors exploit vulnerabilities in AI systems by crafting deceptive prompts designed to manipulate the AI’s logic and behavior. Such attacks can lead to the generation of biased, harmful, or misleading responses. For instance, an attacker might input prompts that trick the AI into providing sensitive or incorrect information. Research emphasizes the importance of developing robust defenses against these threats, such as implementing input validation mechanisms and monitoring for unusual prompt patterns [12]. Techniques such as anomaly detection and filtering mechanisms can help identify and block malicious inputs, thereby protecting the AI's integrity and ensuring it functions as intended.

Ensuring data privacy is another crucial concern in AI interactions. Prompts and responses may contain sensitive information that, if not properly protected, could lead to data breaches or privacy violations. Effective measures include encrypting data and employing anonymization techniques to safeguard personal information. Additionally, AI systems should be designed to minimize the retention of sensitive data and comply with data protection regulations like the General Data Protection Regulation (GDPR). Ensuring that prompts do not inadvertently request or expose confidential information is essential for maintaining privacy [13].

Generative AI represents a critical advancement in natural language processing, with extensive implications across various facets of life, including education. This technology excels in applications such as chatbots, virtual assistants, language translation, and content generation. Mastery over human language allows generative AI to uncover patterns that might elude human perception [14].

The efficacy of AI language models depends not only on their underlying algorithms and training data but also on the quality of the prompts they receive. Through meticulous prompt engineering, users can enhance the AI's ability to provide precise, contextually relevant information [15]. In some cases, users can even manipulate AI outputs beyond standard constraints, a process known as jailbreaking or reverse engineering.

Metaphorically, if generative AI is Aladdin's magic lamp, one’s prompts are the wishes engineered to let the genie out. Effective prompt engineering involves:

By employing these strategies, users can optimize prompt engineering to elicit meaningful, accurate responses from AI models, aligning with their specific objectives and requirements. This approach can encourage critical thinking, creativity, and deeper understanding, particularly in educational contexts [17].

Microsoft Copilot has significantly improved productivity for office workers by integrating AI-powered suggestions into Microsoft 365 applications. Copilot aids users in drafting documents, creating presentations, and analyzing data. Effective prompt engineering is crucial for ensuring that Copilot’s suggestions align with users' goals and needs. Mastery of prompt crafting enables users to leverage Copilot’s capabilities to streamline workflows and enhance efficiency [16].

OpenAI’s GPT-4 has been utilized in various content generation applications, including creative writing, technical documentation, and marketing copy. Crafting specific and contextually rich prompts is essential for generating high-quality content. Users who understand the nuances of prompt engineering can produce more relevant and engaging content, highlighting the impact of effective prompt crafting on the quality of AI-generated outputs [10,11].

Google Bard utilizes its extensive search capabilities to deliver accurate and relevant responses to user queries. Effective prompt crafting is crucial for retrieving precise information and enhancing conversational interactions. Users who excel in prompt engineering can obtain more accurate answers and insights, emphasizing the importance of prompt quality in leveraging Bard’s functionalities [17,18].

According to a report by Grand View Research, Inc, the global prompt engineering market was valued at USD 222.1 million in 2023 and is projected to grow at a compound annual growth rate (CAGR) of 32.8% from 2024 to 2030, reaching USD 2.06 billion by 2030. This growth is driven by advancements in generative AI and the increasing digitalization and automation across various industries. Prompt engineering, which involves designing and optimizing prompts to obtain relevant and accurate responses from large language models (LLMs), is becoming essential in fields such as healthcare, banking, financial services, insurance, and retail. The integration of AI technologies, particularly Natural Language Processing (NLP), is boosting demand for prompt engineering tools and services. Additionally, government investments in AI research are expected to positively impact the market. Prompt engineering helps improve AI model performance, reduce biases, and enhance user experience by guiding AI models to focus on specific tasks.

Generative AI technologies are continually evolving, with advancements in natural language processing, machine learning, and data analysis. As these technologies become more sophisticated, the need for effective prompt engineering will continue to grow. Future developments may include enhanced contextual understanding, improved response accuracy, and greater integration into various applications.

The rise of Generative AI tools underscores the increasing importance of prompt engineering as a key skill. Professionals across different sectors will need to master prompt crafting to leverage AI technologies effectively. The ability to create precise and contextually appropriate prompts will become a valuable asset in optimizing AI interactions and achieving desired outcomes.

As the demand for prompt engineering roles grows, it is important to highlight how prompt engineering is becoming a fundamental skill across a wide range of tech and white-collar jobs. In the tech industry, professionals such as software developers, data scientists, and product managers increasingly rely on prompt engineering to optimize AI functionalities. For instance, developers need to craft effective prompts to ensure that AI models integrate seamlessly with applications, while data scientists use prompts to extract accurate insights from AI systems. Product managers leverage well-designed prompts to align AI features with user needs and business goals.

In white-collar sectors, the significance of prompt engineering is equally profound. Marketing and communications professionals utilize AI tools for generating content and conducting market analysis, necessitating skillful prompt design to achieve relevant and impactful results. Human resources teams employ AI for tasks like resume screening and employee feedback, requiring precise prompts to ensure fair and unbiased evaluations. Customer support representatives use AI-driven chatbots, making effective prompt crafting essential for delivering accurate and satisfactory responses.

As AI technology becomes integral to diverse job functions, mastering prompt engineering is crucial for enhancing efficiency, accuracy, and fairness, making it a valuable skill across various professional fields.

As generative AI technologies continue to evolve, the role of prompt engineering becomes increasingly pivotal in optimizing their interactions and outputs. Mastering the craft of prompt engineering is essential for users aiming to harness the full potential of AI models. This skill involves designing prompts that are both precise and contextually relevant, thereby guiding AI systems to generate responses that are accurate, useful, and aligned with user intent. A deep understanding of the technical aspects of AI models, such as transformer architectures and tokenization processes, is fundamental for effective prompt engineering. These models’ capabilities and limitations shape how prompts should be crafted to achieve the best results. For instance, being aware of token constraints and knowledge cutoffs allows users to frame their queries in a manner that maximizes the relevance and coherence of AI-generated responses. The iterative refinement process comprising trial and error along with feedback loops is crucial for developing effective prompts. By continuously testing and adjusting prompts based on the responses received, users can fine-tune their queries to enhance the quality and precision of AI outputs. Additionally, addressing issues of bias and fairness in prompt design is vital for ensuring that AI systems produce equitable and unbiased results, reflecting a commitment to ethical considerations and inclusivity. Security concerns, such as prompt injection attacks and data privacy, further underscore the importance of meticulous prompt engineering. Safeguarding AI systems against malicious manipulations and protecting sensitive data are essential for maintaining the integrity and reliability of AI applications. Looking forward, as generative AI technologies become more advanced and integrated into various industries, the demand for sophisticated prompt engineering skills will grow. Professionals will need to cultivate expertise in crafting effective and ethical prompts to fully leverage these tools. The emergence of prompt engineering as a specialized skill highlights its crucial role in optimizing AI interactions and ensuring responsible deployment across diverse applications. Finally, prompt engineering extends beyond the formulation of queries; it encompasses a comprehensive understanding of AI technologies and the ethical management of their outputs. By mastering prompt engineering, users can unlock the transformative potential of generative AI, addressing the challenges of bias, fairness, security, and privacy while driving innovative and impactful applications [19-27].