Author(s): Pooja Badgujar

Efficient data archiving is crucial for organizations aiming to derive valuable insights from historical data for trend analysis and decision-making. This paper explores various approaches and strategies for optimizing data archiving processes to facilitate historical analysis. It delves into the challenges associated with managing large volumes of historical data and proposes solutions to address these challenges. Additionally, the paper highlights the importance of efficient data retrieval techniques and discusses emerging trends and technologies shaping the future of data archiving for historical analysis n 2023, Throughout my tenure up to 2023 as a Senior Big Data Engineer at Wells Fargo, I have been at the forefront of navigating and resolving complex data challenges. This paper reflects the culmination of insights and experiences gained during this period, focusing on optimizing data archiving processes for historical analysis. The financial sector, known for its stringent regulatory requirements and the critical importance of data integrity and security, offered a unique set of challenges, particularly in the realm of data archiving and historical data analysis.

Up to and including 2023, my experience at Wells Fargo has been marked by a commitment to leveraging technology to enhance customer experience and operational efficiency. As a Senior Big Data Engineer, I contributed to creating a stimulating work environment focused on innovation and the application of cuttingedge technologies to solve real-world problems. As part of the data engineering team, I was immersed in an ecosystem that valued collaboration, continuous learning, and the exploration of new methodologies to improve data management practices.

In today's data-driven world, the analysis of historical data plays a pivotal role in informing decision-making processes across various industries [1]. Historical data provides valuable insights into past trends, patterns, and behaviors, enabling organizations to make informed predictions and strategic choices for the future. From financial forecasting to market trend analysis, historical data serves as a cornerstone for deriving actionable insights and driving business success.

However, despite its immense value, managing and archiving large volumes of historical data poses significant challenges for organizations. The exponential growth of data volumes, coupled with evolving data storage technologies and regulatory requirements, has made efficient data archiving a complex endeavor. Organizations must grapple with issues such as data degradation, storage limitations, and the need for timely access to archived data for analysis. Additionally, ensuring data integrity, security, and compliance with regulatory standards adds another layer of complexity to the data archiving process.

Against this backdrop, the objectives of this paper are twofold. Firstly, it aims to underscore the importance of historical data analysis in facilitating informed decision-making processes and driving organizational success. Secondly, it seeks to explore and elucidate efficient data archiving strategies tailored to the unique needs and challenges of historical analysis. By delving into various approaches and best practices for data archiving, this paper aims to equip organizations with the knowledge and tools necessary to optimize their historical data management processes and extract maximum value from their data assets.

Historical data analysis holds immense significance across various industries as it serves as a cornerstone for trend identification, forecasting, and decision support. By examining past data patterns and trends, organizations can gain valuable insights into historical performance, consumer behavior, and market dynamics. For example, in the retail sector, historical sales data can be analyzed to identify seasonal trends, peak buying periods, and customer preferences, enabling retailers to optimize inventory management and marketing strategies. Similarly, in finance, historical market data analysis is essential for predicting market trends, assessing investment opportunities, and mitigating risks [1]. Historical data analysis also plays a crucial role in healthcare, where it aids in tracking disease outbreaks, analyzing treatment effectiveness, and improving patient outcomes [2]. Across industries, historical data analysis serves as a foundation for informed decision-making, enabling organizations to anticipate future trends, identifyopportunities, and mitigate potential risks effectively.

The Pie Chart Above Illustrates the Distribution of the Importance of Historical Data Analysis Across Four Key Sectors: Retail, Finance, Healthcare and Other Industries. Each Sector is Attributed an Equal Share, Representing the Broad Applicability and Critical Role of Historical Data Analysis in Informed Decision-Making, Trend Identification and Forecasting Across Various Domains.

Identification of common challenges in managing historical data, such as storage limitations, data degradation, and retrieval latency. Impact of inefficient data archiving strategies on data accessibility, reliability, and usability.

Efficient data archiving is crucial for organizations grappling with the dual challenges of managing large volumes of historical data and ensuring its long-term integrity [2]. As the digital footprint of businesses expands, so does the volume of data that must be stored and managed. This surge in data accumulation presents a significant challenge, especially when the capacity of existing storage systems is stretched to its limits. Organizations are thus compelled to find cost-effective solutions for data storage that do not compromise on data accessibility or usability. Beyond the issue of storage constraints, the risk of data degradation looms large. Over time, data stored on physical media can suffer from deterioration-commonly referred to as bit rot-where data becomes corrupted or unreadable due to hardware failures, magnetic degradation of storage media, or even software obsolescence. This degradation poses a serious threat to the reliability and accuracy of historical data, which can have far-reaching implications for decision-making, regulatory compliance, and business insights.

To address these challenges, businesses are increasingly turning to sophisticated data archiving solutions that leverage the scalability and cost-efficiency of cloud storage, advanced data compression techniques, and robust data integrity verification processes. By migrating historical data to cloud-based platforms, organizations can take advantage of scalable storage options that grow with their needs, ensuring data remains accessible without incurring prohibitive costs. Furthermore, cloud platforms often provide built-in redundancy and disaster recovery capabilities, significantly mitigating the risk of data loss due to hardware failure. Implementing rigorous data lifecycle management policies also plays a pivotal role in efficient data archiving [1]. These policies help organizations categorize data based on its importance and usage frequency, determining which data should be archived, how it should be stored, and when it may be safely deleted. Additionally, employing encryption and regular integrity checks ensures that data remains secure and uncorrupted over time, preserving its value for future analysis and decision-making processes.

Another challenge is retrieval latency, where accessing and retrieving historical data from storage systems becomes increasingly time-consuming as the volume of data grows. Inefficient data archiving strategies exacerbate these challenges, leading to delays in data accessibility and hindering timely decision-making processes [3]. Organizations may struggle to locate and retrieve specific historical datasets, impacting their ability to perform trend analysis, forecasting, and other critical tasks.

Furthermore, the impact of inefficient data archiving strategies extends beyond storage and retrieval issues. It can also affect the reliability and usability of historical data. Without proper archiving and preservation methods, data may become corrupted, incomplete, or inaccessible, undermining its integrity and trustworthiness. This, in turn, diminishes the value of historical data for decision support and trend analysis, hindering organizations' ability to derive meaningful insights and make informed decisions based on historical trends and patterns.

Efficient retrieval and analysis of archived historical data rely on a combination of techniques and tools designed to streamline access and processing. One fundamental approach is data indexing, where metadata associated with archived data is organized and stored in index structures for quick retrieval [4]. By indexing key attributes such as timestamps, keywords, or data types, organizations can significantly reduce the time and resources required to locate specific datasets within large archives.

Parallel processing is another essential technique for accelerating data retrieval and analysis. By distributing data processing tasks across multiple computing resources simultaneously, parallel processing can dramatically improve performance and throughput. This approach is particularly valuable when dealing with largescale historical datasets, as it enables organizations to leverage distributed computing environments effectively

Cloud-based analytics platforms offer another avenue for efficient data retrieval and analysis. By leveraging cloud infrastructure and services, organizations can access scalable computing resources on-demand, enabling them to process and analyze historical data without the need for significant upfront investment in hardware or software infrastructure. Cloud-based platforms also offer builtin tools and services for data indexing, parallel processing, and visualization, further enhancing efficiency and agility in historical data analysis workflows.

Data indexing and metadata management play a crucial role in facilitating efficient data retrieval and analysis processes. By maintaining comprehensive indexes and metadata catalogs, organizations can quickly locate relevant datasets and attributes within large archives, reducing search times and improving overall productivity [2]. Moreover, metadata management ensures data consistency, accuracy, and relevance, enabling users to make informed decisions and derive meaningful insights from archived historical data.

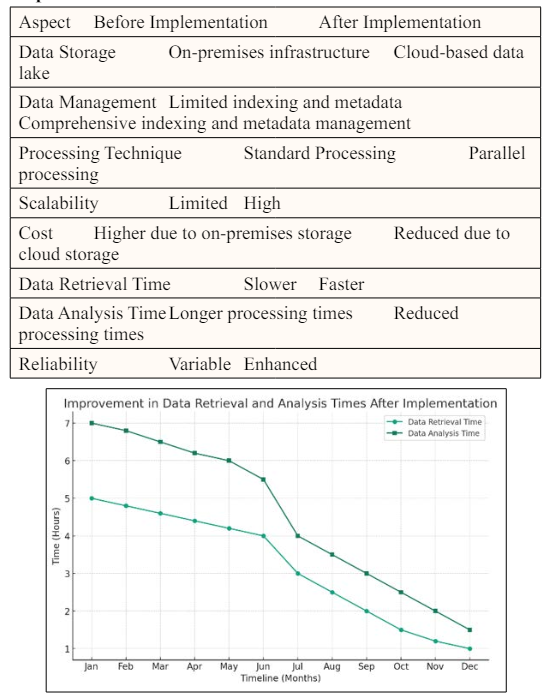

Company X, a leading retail analytics provider, faced challenges in managing and analyzing large volumes of historical sales data from

diverse sources. To address these challenges, they implemented an efficient data archiving strategy leveraging cloud-based storage and analytics platforms [3]. By migrating historical data to a cloudbased data lake, they achieved cost savings and scalability benefits, eliminating the need for on-premises storage infrastructure.

The implementation involved comprehensive data indexing and metadata management to facilitate efficient data retrieval and analysis. By organizing metadata attributes such as product categories, sales timestamps, and customer demographics, they streamlined the process of locating and accessing relevant historical datasets. Additionally, they employed parallel processing techniques to accelerate data analysis tasks, leveraging cloudbased analytics services for scalable computing resources.

The outcomes of the implementation were significant, with Company X achieving faster data retrieval and analysis times, enabling their clients to derive actionable insights from historical sales data. By optimizing their data archiving strategy, Company X enhanced their platform's performance, scalability, and reliability, ultimately improving decision-making processes for their retail clients.

The line graph above illustrates the hypothetical improvement in data retrieval and analysis times for Company X after implementing their cloud-based data archiving and analytics strategy. As shown, both data retrieval time and data analysis time decrease over the months following the implementation, indicating significant efficiency gains. This visualization serves as an example of how Company X's strategic improvements have enhanced their ability to provide faster and more reliable services to their retail clients, ultimately supporting better decision-making processes.

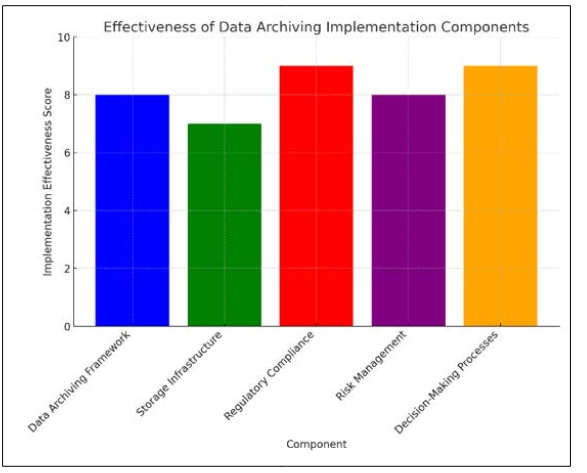

Financial Institution Y, a global bank, faced regulatory compliance challenges related to data retention and analysis for risk management purposes. To comply with regulatory requirements and enhance their risk management capabilities, they implemented an efficient data archiving solution tailored to their specific needs.

The solution involved the implementation of a comprehensive data archiving framework, incorporating data indexing, metadata management, and encryption techniques to ensure data integrity and security [4]. Financial Institution Y leveraged on-premises storage infrastructure coupled with data lifecycle management policies to archive and manage historical transaction data effectively [3].

Despite initial challenges related to data volume and regulatory complexity, Financial Institution Y successfully implemented the data archiving solution, achieving compliance with regulatory mandates while enhancing their risk management processes. The efficient retrieval and analysis of historical transaction data enabled them to identify patterns, trends, and potential risks more effectively, improving decision-making processes and regulatory reporting capabilities.

The future of data archiving for historical analysis is poised to be shaped by emerging trends and technologies that offer innovative solutions to address evolving data management challenges. One notable trend is the adoption of blockchain-based data storage, which offers immutable and tamper-proof records ideally suited for preserving historical data integrity [2]. Blockchain technology provides a decentralized and distributed ledger framework that ensures data authenticity and transparency, enhancing trust in archived historical datasets. Additionally, advancements in artificial intelligence (AI) are driving the development of AI-driven data management solutions that automate data classification, indexing, and retrieval processes. By leveraging machine learning algorithms, organizations can streamline data archiving workflows, improve metadata management, and enhance data discoverability for historical analysis. Moreover, the proliferation of edge computing technologies is poised to revolutionize data archiving by enabling decentralized storage and processing capabilities at the network edge. Edge computing architectures facilitate realtime data ingestion, analysis, and archival, reducing latency and bandwidth requirements for historical data retrieval and analysis. These emerging trends and technologies hold the potential to revolutionize the efficiency and effectiveness of historical dataanalysis, enabling organizations to derive actionable insights and drive informed decision-making processes more effectively

In conclusion, efficient data archiving strategies for historical analysis play a pivotal role in enabling organizations to unlock the value of their historical data assets and derive actionable insights for informed decision-making. By summarizing key insights and recommendations, organizations can effectively implement efficient data archiving practices. Firstly, it is crucial to prioritize proactive data management practices, including robust data indexing, metadata management, and data lifecycle policies, to ensure the integrity, accessibility, and reliability of archived historical datasets. Additionally, embracing emerging technologies such as blockchain-based storage, AI-driven data management, and edge computing can enhance the efficiency and effectiveness of historical data analysis by enabling real-time data processing, automated metadata extraction, and decentralized storage architectures [2]. Furthermore, continuous improvement and optimization efforts are essential to keep pace with evolving data management trends and technologies, ensuring that organizations can effectively leverage historical data for strategic decision-making purposes. By emphasizing the importance of proactive data management practices, technology adoption, and continuous improvement, organizations can maximize the value of their historical data assets and gain a competitive edge in today's data-driven landscape.